Methods and instruments to assess learning outcomes in master's degrees. Analysis of teachers’ perception of their evaluative practice

DOI: https://doi.org/10.5944/educxx1.33443

Keywords: higher education, assessment, student assessment, performance assessment, summative assessment

Abstract

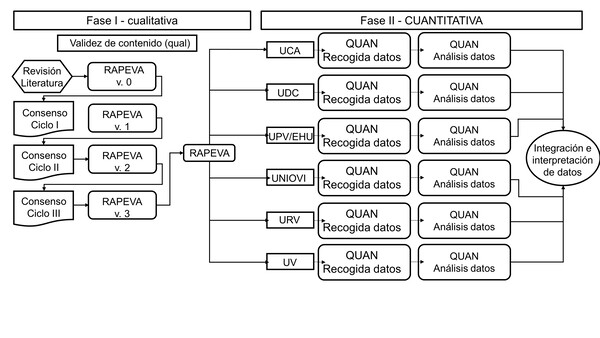

Previous studies on the assessment methods and instruments used in higher education have shown that the final exam has been widely used as the main source of assessment. Advances in knowledge of assessment processes have highlighted the need for a broader and more diverse range of methods and instruments that make it possible to collect thorough and valid information on which to base judgments about students' level of learning. Within the framework of the FLOASS Project, this study explores teachers' perceptions of their own assessment practice. A mixed methodology with an exploratory sequential design was used to gather the perceptions of 416 professors from six universities in different Spanish autonomous communities, who completed the RAPEVA questionnaire (Self-report of the teaching staff on their practice in learning outcomes assessment). The most widely used assessment methods include participation, problem-solving tests, performance tests, digital objects or multimedia presentations, and projects; among the assessment instruments, rubrics and evaluative arguments stand out. The largest differences were found by university, field of knowledge, and teachers' degree of confidence in and satisfaction with the assessment system. By gender or teaching experience, differences are small or non-existent. Future lines of research are proposed to enable a better understanding of assessment practice in higher education.

License

Copyright (c) 2022 María Soledad Ibarra-Sáiz, Gregorio Rodríguez-Gómez, José Francisco Lukas-Mujika, Alaitz Santos-Berrondo

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.

Educación XX1 is published under a Creative Commons Attribution-NonCommercial 4.0 International License (CC BY-NC 4.0).