Estudios e investigaciones

A digital world toolkit: enhancing teachers' metacognitive strategies for student digital literacy development

Herramientas para un mundo digital: mejorando estrategias metacognitivas docentes para desarrollar la alfabetización digital del alumnado

RIED-Revista Iberoamericana de Educación a Distancia, vol. 27, núm. 2, 2024

Asociación Iberoamericana de Educación Superior a Distancia

This work is licensed under a Creative Commons Attribution 4.0 International License.

How to cite: Pereles, A., Ortega-Ruipérez, B., & Lázaro, M. (2024). A digital world toolkit: enhancing teachers' metacognitive strategies for student digital literacy development. [Herramientas para un mundo digital: mejorando estrategias metacognitivas docentes para desarrollar la alfabetización digital del alumnado]. RIED-Revista Iberoamericana de Educación a Distancia, 27(2), pp. 267-294. https://doi.org/10.5944/ried.27.2.38798

Abstract: This study explores the relationship between teachers' use of metacognitive strategies and instructional improvement, with a particular focus on students' media literacy and information management. The study involved 252 teachers enrolled in the master's programme in educational technology at an online university. To assess teaching styles and the integration of the Internet in the classroom, a quantitative methodology was used through the PISA 2018 questionnaire. The results indicate the reliability of the instrument in measuring digital literacy across the seven dimensions addressed. In addition, the Metadig tool is used to measure the metacognitive component in relation to media literacy. It is observed that its application stimulates the acquisition and development of each dimension analysed. Teachers who use metacognitive strategies and promote media literacy enhance the development of digital competence in the classroom. It therefore seems appropriate to integrate well-structured approaches to self-regulated learning through metacognition into teacher education. Metacognition supports effective organisation of learning, control of information and understanding of context, fostering autonomous learning. These efforts promote digital competences in the use of online resources, and ultimately enrich teaching methods and learning strategies.

Keywords: self-regulated learning, metacognitive strategies, computer literacy, cognitive process, learning strategy, distance study.

Resumen: La presente investigación analiza la relación entre el uso de estrategias metacognitivas y la mejora de la instrucción entre los docentes, centrándose especialmente en la alfabetización mediática y la gestión de la información de los estudiantes. Participaron en el estudio 252 profesores matriculados en el programa de máster en tecnología educativa de una universidad en línea. Primero, para evaluar los estilos de enseñanza y la integración de Internet en las aulas, se utiliza una metodología cuantitativa a través del uso del cuestionario PISA 2018. Los resultados indican la fiabilidad del instrumento en la medición de la alfabetización digital a través de las siete dimensiones abordadas (utilizar palabras clave, confiar en información de Internet, interpretar información relevante, comprender consecuencias de compartir datos, utilizar descriptores, detectar información subjetiva, detectar spam). Además, para evaluar el componente metacognitivo en vínculo con la alfabetización mediática, se utiliza la herramienta Metadig. Se observa que su empleo estimula la adquisición y el desarrollo de cada dimensión analizada. Los profesores que utilizan estrategias metacognitivas y promueven la alfabetización mediática mejoran el desarrollo de la competencia digital en el aula. Parece apropiado integrar en la formación del profesorado enfoques bien estructurados del aprendizaje autorregulado a través de la metacognición. La metacognición favorece la organización eficaz del aprendizaje, el control de la información y la comprensión del contexto, fomentando el aprendizaje autónomo. Estos esfuerzos promueven las competencias digitales en el uso de recursos en línea y, en última instancia, enriquecen los métodos de enseñanza y las estrategias de aprendizaje.

Palabras clave: aprendizaje autorregulado, estrategias metacognitivas, alfabetización digital, proceso cognitivo, estrategia de aprendizaje, enseñanza a distancia.

INTRODUCTION

Developing digital competence is a priority in education today. The ability to effectively use digital technologies and access information is essential for students' academic and professional success in an increasingly digitised world (UNESCO, 2016). To meet this challenge, teachers need to play a key role and use effective pedagogical strategies that promote students' digital literacy, as reported in Decision (EU) 2022/2481 of the European Parliament and of the Council setting out the strategic agenda for the Digital Decade to 2030 (European Union, 2021).

There have been numerous frameworks and strategic initiatives to facilitate the acquisition and integration of technological competences, not only for learners, but also for the professional development of educators. Examples include the European Framework for Digital Competence in Education 'DigCompEdu' (Punie & Redecker, 2017) and the European Parliament's (2016) guidelines on lifelong learning. The Digital Competence Framework for Teachers, developed by the National Institute for Educational Technologies and Teacher Training (INTEF, 2022), builds on the European Commission's Digital Education Action Plan (2021-2027) (European Commission, 2021), providing a comprehensive guide for teachers to improve their digital competence and promote the same in students. Accordingly, the PISA 2018 instrument (OECD, 2017, 2019), recognised for its comprehensiveness, assesses a range of digital skills, from the use of search engines to the detection of biased information. Its high reliability underlines its validity and usefulness. The results of PISA 2018 in Reading Skills reveal that the use of metacognitive strategies is one of the variables with the greatest weight in explaining high or low results (Vázquez-López & Huerta-Manzanilla, 2021).

In this context, the use of metacognitive strategies emerges as a powerful tool for achieving those learning objectives. Metacognitive strategies, as described by Zimmerman and Moylan (2009), involve higher cognitive processes of monitoring, regulating and reflecting on one's own thinking. Those who exercise metacognitive strategies are self-aware of their cognitive processes, recognise their strengths and weaknesses and are able to effectively monitor and regulate their learning, including planning, monitoring and evaluating their performance (Huff & Nietfeld, 2009; Muijs & Bokhove, 2020).

Moreover, metacognition has been identified as a critical factor in teacher professional development, having a significant impact on teaching effectiveness and student learning outcomes (Stephanou & Mpiontini, 2017). Teachers who use metacognitive strategies reflect on their instructional practices, evaluate their efficacy and adapt to respond to students' needs. These strategies promote awareness and control of cognitive processes during lessons (Bannert et al., 2009), which influences students' acquisition and processing of information.

Additionally, teachers' use of metacognitive strategies positively influences students' motivation and engagement (Efklides, 2011; Zimmerman & Moylan, 2009). Research indicates that teachers who promote metacognitive processes and encourage self-regulation in students contribute to improved motivation and academic performance (Kaczkó & Ostendorf, 2023). These strategies also play a key role in the development of critical thinking skills among students (Kaczkó & Ostendorf, 2023), allowing them to critically evaluate information and construct informed arguments. With a view to measuring these competences, the Metadig instrument (Ortega-Ruipérez & Castellanos, 2021) offers an innovative digital solution to foster self-regulation of learning. It clearly identifies the metacognitive strategies of planning, monitoring and self-assessment and empowers participants to manage and improve their own learning process during the master’s programme (Ortega-Ruipérez & Castellanos, 2023).

In the current educational environment, understanding the importance of metacognitive strategies for promoting digital competence is essential. Teachers employ these strategies to guide students in acquiring digital skills, analysing information and solving problems (Dignath & Büttner, 2018). While promoting self-regulated learning, metacognitive strategies empower students to effectively manage their learning, set goals, monitor progress and make necessary adjustments (Núñez et al., 2022; Wigfield & Cambria, 2010), thereby improving learning outcomes and competences (Panadero, 2017).

Self-regulation and the development of digital competence are closely connected and enhanced through metacognitive strategies (Coll et al., 2023; Sonnenberg & Bannert, 2019). Media literacy and information processing are integral components of digital skills. Teachers use metacognitive strategies to train students to critically evaluate media messages, understand data collection and use, and protect their privacy online (Zheng et al., 2016). Moreover, these strategies support effective communication, collaboration and digital citizenship among students (Villaplana et al., 2022; Suárez & González, 2021), promoting creativity and critical thinking skills (Zimmerman, 2008).

Educators also play a critical role as facilitators for the development of metacognitive skills, promoting autonomy and self-reflection in students (Dobber et al., 2017; Wall & Hall, 2016). Understanding why teachers need advanced metacognitive expertise is essential, as research has established a connection between metacognition and improved academic outcomes, justifying its integration into initial teacher education (Duffy et al., 2009; Perry et al., 2019). Although experienced teachers may sometimes find it difficult to recognise the benefits of metacognitive strategies, such as sharing teaching experiences, the integration of these resources is essential to improve the learning process (Dignath & Büttner, 2018; Halamish, 2018).

Moreover, promoting digital literacy is important, as it involves empowering students with self-regulation skills for information seeking, evaluation and online safety (De Bruyckere et al., 2016). Teachers, again, have an important place as they need effective pedagogical strategies to teach students how to use the Internet appropriately (De Bruyckere et al., 2016).

In view of the changing social and educational scenario, it is essential to prepare teachers in terms of information literacy (Pérez-Escoda, 2017; Monereo, 2011). This includes improving their ability to search for and evaluate information on the Internet, while fostering critical thinking and awareness of online risks (García-Llorente et al., 2020; McDougall et al., 2019). The integration of metacognitive strategies in teacher education is supported as it contributes to the development of information literacy within a more comprehensive digital literacy framework (Arjaya et al., 2013; Salcedo et al., 2022). Even with the acknowledged importance of metacognitive strategies and media and information literacy, there is limited research exploring their interrelationship. It is essential to understand whether teachers skilled in the use of metacognitive strategies can guide students in critically evaluating online information and encourage self-reflection on Internet use.

MATERIALS AND METHOD

The present study has a dual intention. The main purpose of the research is to provide evidence about the reliability of the instrument, to determine whether the selected PISA items are effective in adequately assessing the implementation of good pedagogical practices related to the use of the Internet, with the aim of promoting information literacy.

Secondly, the aim is to check whether the use of a digital tool that facilitates metacognitive strategies for the self-regulation of learning in online teacher training (Metadig) improves the ability to teach media and information literacy. This capacity is part of area 6: Developing students' digital competence, of the Digital Competence in Teaching framework, proposed by the National Institute of Educational Technologies and Teacher Training (INTEF, 2022). To carry out this research, a quasi-experimental design was chosen because participants could decide voluntarily whether or not to use the tool in question. Given these circumstances, it was impracticable to randomly assign them to the research groups.

In order to control the independent variable, an experimental group and a control group were established. In this way, a comparison of the improvements obtained in both groups was made to determine the presence of significant differences and validate whether these improvements can be attributed to the use of the tool under study.

In addition, to ensure a more rigorous control over the independent variable, a pre-post design was implemented, in which data were collected on the dependent variables both before and after the intervention. In this way, we sought to examine the significance of the improvements observed as a consequence of the use of the tool, in comparison with the initial level of application of the metacognitive strategies.

Participants

The study population comprises all students enrolled in the master’s program in Educational Technology for Teachers, amounting to a total of 650 individuals. The sample selected to carry out the research is composed of 252 students, which represents 38.7 % of the program population. This proportion is considered adequate for making inferences and generalizing the results obtained. The sampling method used in this study is non-probabilistic, as students have the option to participate voluntarily in the research. Consequently, it is a convenience sample.

Regarding the distribution of the participants in the research groups, 42 % of them (105 participants) used the application on a regular basis, while the remaining 58 % (147 participants) only used it during the first few days. These figures have allowed the formation of two study groups: the first one composed of those who used the application on a regular basis (experimental group), and the second one composed of those who did not use it frequently (control group).
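The proportions reported for the sample and the two groups can be checked with a few lines of arithmetic. The sketch below uses only the counts stated above; the variable names are illustrative and not part of the study.

```python
# Counts taken from the Participants section.
population = 650      # students enrolled in the master's program
sample = 252          # students who took part in the study
experimental = 105    # regular users of the application
control = 147         # participants who used it only during the first days

# The two groups should account for the whole sample.
group_total = experimental + control

# Shares expressed as percentages.
sample_share = 100 * sample / population
experimental_share = 100 * experimental / sample
control_share = 100 * control / sample

print(f"sample / population: {sample_share:.2f} %")
print(f"experimental group:  {experimental_share:.2f} %")
print(f"control group:       {control_share:.2f} %")
```

The sample share comes out at roughly 38.77 %, which the text reports as 38.7 %, and the group shares round to the 42 % and 58 % given above.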

Instruments and Materials

In reference to the instruments and materials used, an intervention was carried out through a four-hour training course focused on the use and teaching of self-regulated learning. Participants were offered the voluntary option of using the digital tool Metadig (Ortega-Ruipérez & Castellanos, 2021) in order to self-regulate their own learning process in the master’s program. This tool has been designed in a way that clearly distinguishes the three types of metacognitive strategies: planning, monitoring, and self-assessment. Metadig has progressed through iterations based on learner needs, usability and expert validation, proving its effectiveness in improving comprehension, planning and critical thinking (Ortega-Ruipérez & Castellanos, 2023). During the first week, participants were asked to plan their goals and determine the approach they would follow to achieve them. Over the next 15 weeks, corresponding to the duration of the four-month period, the application allowed them to manage and monitor their weekly progress. For the last week, the application included a self-assessment function that allowed them to review which goals were the most difficult to achieve, so that they could spend more time reviewing them.

To measure teaching practices related to Internet use, the PISA 2018 test (OECD, 2017, 2019) was used: a questionnaire of seven items, each of which corresponds to one dimension. It addresses the use of keywords in search engines (item 1), trust in Internet information (item 2) and the relevance of Internet content for schoolwork (item 3). The last four dimensions are the understanding of the consequences of making information public online (item 4), the use of short descriptors below links in search results (item 5), the detection of subjective or biased information (item 6) and, finally, the detection of phishing or spam emails (item 7). The reliability analysis with our sample yielded a Cronbach's alpha of 0.936.

Procedure and data analysis

Throughout the second term of the master's, an intervention was carried out in which students were contacted a week before the start of the master’s course. Through various channels, such as e-mails, notifications in the virtual classroom and telephone calls by tutors, they were informed about the possibility of receiving training to improve their study habits in the master’s degree.

The training was divided into two phases. The first part consisted of a two-hour session held during the first week of the term. This lesson explained the concept of self-regulated learning and the importance of using strategies to self-regulate the learning process. Emphasis was put on metacognitive strategies and it was shown how they could apply them if they decided to use the Metadig tool. The use of this tool was presented as optional and framed within the research project. Participants were therefore divided into the experimental group or the control group, depending on whether they chose to use the tool or not. To participate in the study, they were informed about the need to complete a questionnaire that included items from the 2018 PISA test within the Program for International Student Assessment (OECD, 2017), as well as other scales that were part of the research project. Those who could not attend the live session had the option to watch it later for one week. The questionnaire was also available for one week to collect pre-test responses.

Over the following 15 weeks, corresponding to the duration of the four-month period, the students used the tool autonomously, organizing their learning process as they saw fit. They were reminded to use the tool twice during the term, specifically in weeks five and ten. Before the exams, they were also reminded how they could use the self-assessment function of the tool to improve their study.

At the end of the term, the second training session, also lasting two hours, was conducted. This time, the focus was on how participants could teach self-regulation learning strategies to their own students. The first half hour of the session was spent reflecting together on how the tool had helped them and whether they felt that such strategies could be useful for their students. The session ended by thanking them for their participation and again asking them to complete the questionnaire in order to obtain the post-test responses. Similar to the first meeting, those who were not able to attend live had the opportunity to watch it afterwards and answer the questionnaire during the following week.

To address the first objective, an approach called Structural Equation Modelling (SEM) is used. This multivariate analysis technique allows us to test models that propose causal relationships between variables (Ruiz et al., 2010). Unlike regression models, SEM is more flexible and focuses on hypothesis confirmation rather than exploration (Escobedo et al., 2016). SEM is preferable to Confirmatory Factor Analysis (CFA) because it extends the possibility of relationships between latent variables and encompasses both a measurement model and a structural model (Schreiber, 2021). The purpose of SEM is to validate a theory describing the relationships between variables by using empirical data to confirm a theoretical model based on real information.

In this initial stage, various statistics are examined to assess the fit of the models. On the one hand, the Comparative Fit Index (CFI) and the Tucker-Lewis Index (TLI) are used; both improve on the Normed Fit Index (NFI) and are less sensitive to model complexity. Both indices must be greater than 0.9 to consider that the model has a good fit. On the other hand, the ratio of the chi-square to the degrees of freedom (χ²/df) is used as an index, which must be less than 0.5. Two indices are also used to analyse the residuals. The Root Mean Square Error of Approximation (RMSEA) indicates how well the model fits the reference population, with lower values indicating a better fit; it is considered acceptable if the value is between 0.05 and 0.08. The Standardized Root Mean Square Residual (SRMR) summarizes the differences between the observed and estimated variance-covariance matrices; a mean standardized residual above 0.1 indicates a fit problem. Once the model that best fits the data is selected, information on item loadings on the factors is incorporated and reliability is assessed using different statistics. All these data are interpreted together.
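For reference, the fit indices named above are commonly defined as follows. This is a sketch of the usual textbook forms, not necessarily the exact variants computed by the software used in the study. The symbols are assumptions introduced here: subscript M denotes the tested model, subscript B the baseline (independence) model, and N the sample size. Some programs use N instead of N − 1 in the RMSEA denominator.

$$
\mathrm{CFI} = 1 - \frac{\max\left(\chi^2_M - df_M,\; 0\right)}{\max\left(\chi^2_M - df_M,\; \chi^2_B - df_B,\; 0\right)}
$$

$$
\mathrm{TLI} = \frac{\chi^2_B / df_B \;-\; \chi^2_M / df_M}{\chi^2_B / df_B \;-\; 1}
$$

$$
\mathrm{RMSEA} = \sqrt{\frac{\max\left(\chi^2_M - df_M,\; 0\right)}{df_M \,(N - 1)}}
$$

Under these definitions, CFI and TLI compare the tested model with the baseline model, whereas RMSEA depends only on the tested model's chi-square, its degrees of freedom and the sample size.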

In addition, the reliability of the test was verified using the study sample by analysing Cronbach's alpha coefficient for all the items as well as for the items of each dimension. In this way, it was possible to confirm that the measuring instrument used was suitable for assessing media literacy in the context of this sample (α=0.936).
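As an illustration of the coefficient mentioned above, Cronbach's alpha for k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the total score. The following minimal sketch implements that formula with the standard library only. The function name and the small response matrix are hypothetical and are not the study data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])                      # number of items
    items = list(zip(*responses))              # transpose: one tuple per item
    item_variance_sum = sum(variance(col) for col in items)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical answers of four respondents to three items on a 1-4 scale.
toy_responses = [
    [1, 1, 2],
    [2, 3, 2],
    [3, 3, 4],
    [4, 4, 4],
]

alpha = cronbach_alpha(toy_responses)
print(f"alpha = {alpha:.3f}")
```

The same function can be applied to all seven items at once or to any subset of items, provided every respondent has a score for each item.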

In the second place, two variables were created for each dimension, one based on the pretest items and the other based on the posttest items, calculating the value of the items corresponding to each dimension of study. Finally, it was examined whether the distribution of the sample corresponded to a normal distribution for each dimension. In cases where the dimension followed a normal distribution, a Generalized Linear Model with Gaussian distribution was applied. In cases where the distribution was not normal, a Generalized Linear Model was also used, but the distribution was adjusted to a Gamma distribution for those variables that did not have symmetry. In both cases, the impact of belonging to one group or the other was analysed, i.e., whether the participants had used the Metadig tool to employ metacognitive self-regulation strategies, considering this variable as a factor in the test.

This impact was measured, depending on the group, in each dimension of the study, taking the post-test variable of each dimension as the dependent variable, with the aim of assessing its impact after the intervention. To assess the real impact, free of the pretest effect, the pretest variable of the dimension was included as a covariate.
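The logic of the design described above (post-test as dependent variable, group as factor, pretest as covariate) can be sketched for the Gaussian case. With a Gaussian distribution and an identity link, the model reduces to a classical analysis of covariance, so the pooled within-group slope and the adjusted group means have a closed form. The sketch below assumes that simplification, uses hypothetical data, and does not cover the Gamma case. The function name is illustrative.

```python
from statistics import mean


def ancova_adjusted_means(pre, post, group):
    """Pooled pretest slope and pretest-adjusted post-test mean per group.

    Equivalent to least squares on: post ~ group + pretest (identity link).
    """
    grand_pre = mean(pre)
    sxy = 0.0  # pooled within-group cross-products
    sxx = 0.0  # pooled within-group sum of squares of the pretest
    group_means = {}
    for g in sorted(set(group)):
        xs = [x for x, gg in zip(pre, group) if gg == g]
        ys = [y for y, gg in zip(post, group) if gg == g]
        mx, my = mean(xs), mean(ys)
        sxy += sum((x - mx) * (y - my) for x, y in zip(xs, ys))
        sxx += sum((x - mx) ** 2 for x in xs)
        group_means[g] = (mx, my)
    slope = sxy / sxx
    # Move each group's post-test mean to the overall pretest mean.
    adjusted = {
        g: my - slope * (mx - grand_pre) for g, (mx, my) in group_means.items()
    }
    return slope, adjusted


# Hypothetical scores: group 0 = control, group 1 = experimental.
pre = [1, 2, 3, 2, 3, 4]
post = [1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
group = [0, 0, 0, 1, 1, 1]

slope, adjusted = ancova_adjusted_means(pre, post, group)
effect = adjusted[1] - adjusted[0]
print(slope, adjusted, effect)
```

In this toy example the raw post-test means differ by 1.5 points, but the experimental group also started higher on the pretest. After adjusting for the pretest, the difference attributable to the group is 1 point, which is the kind of pretest-free comparison the covariate is meant to provide.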

RESULTS

Objective 1. Quality of the instrument

Initially, it was necessary to examine the model fit indices (Table 1) in order to identify which showed the most favourable results. While analysing the model, it was observed that the chi-square divided by the degrees of freedom (χ²/df), the CFI, TLI, SRMR and RMSEA provided satisfactory results that support the adequate adjustment of the model. In particular, the results obtained for the CFI, TLI and SRMR are notably excellent.

| Model | χ²/df | CFI | TLI | SRMR | RMSEA |
| --- | --- | --- | --- | --- | --- |
| 1-Factor Model | 5.521 | 0.993 | 0.989 | 0.026 | 0.134 |

Consequently, the results indicate an adequate adjustment of the model. In this sense, it can be affirmed that the instrument created using the PISA items is able to measure pedagogical practices related to the development of information literacy competence.

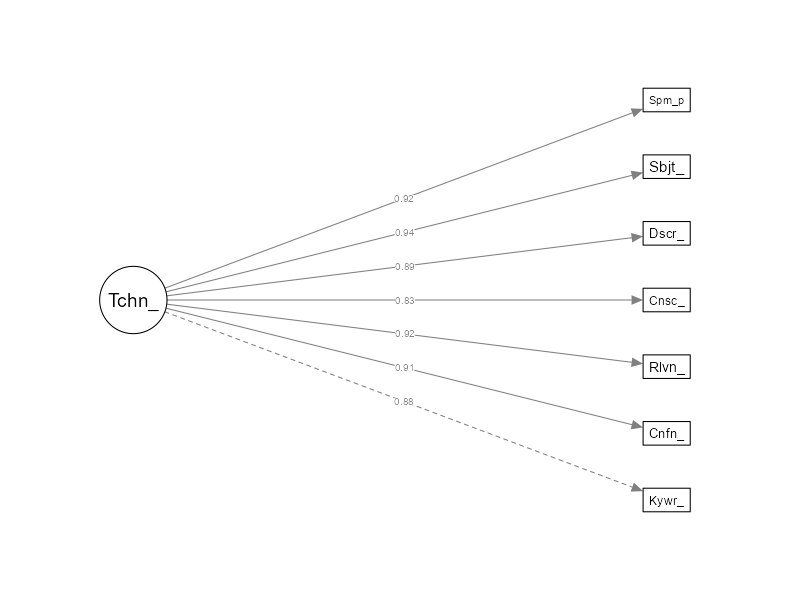
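The RMSEA in Table 1 can be related to the reported χ²/df ratio and the sample size. Under the common definition of RMSEA, the index equals the square root of (χ²/df − 1)/(N − 1), floored at zero. The sketch below assumes that definition and N = 252. The function name is illustrative, and the software used in the study may apply a slightly different variant.

```python
import math


def rmsea_from_ratio(chi2_df_ratio, n):
    """RMSEA from the chi-square/df ratio and the sample size (N - 1 form)."""
    excess = max(chi2_df_ratio - 1.0, 0.0)  # misfit beyond what df alone explains
    return math.sqrt(excess / (n - 1))


rmsea = rmsea_from_ratio(5.521, 252)  # ratio from Table 1, N from Participants
print(round(rmsea, 3))  # 0.134
```

The value obtained agrees with the RMSEA reported in Table 1 to three decimals.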

Likewise, a very satisfactory fit of the items in each dimension is observed, according to the statistics presented in Table 2. The coefficient of determination (R²) provides information on the percentage of variance of the factor that is explained by each item, while the standardized beta coefficient reveals its factorial influence.

| Factor | Item | R² | β | z | p |
| --- | --- | --- | --- | --- | --- |
| Teaching Practices | Keywords | 0.773 | 0.879 | | |
| | Trust | 0.830 | 0.911 | 58.8 | <0.001 |
| | Relevant info. | 0.849 | 0.921 | 56.1 | <0.001 |
| | Consequences | 0.684 | 0.827 | 35.3 | <0.001 |
| | Descriptors | 0.786 | 0.887 | 49.5 | <0.001 |
| | Subjective info. | 0.876 | 0.936 | 62.7 | <0.001 |
| | Spam | 0.838 | 0.915 | 65.1 | <0.001 |

Furthermore, special attention was paid to the reliability indices (Table 3) to ensure the quality of the model, and highly favorable results were obtained. Both the ordinal alpha and the omega 1 exceeded the value of 0.9, which is very positive. In addition, the average variance explained (AVE), representing the mean of the R² coefficients of the items in each dimension, reached a value of 0.8. It should be noted that a value of 0.5 is sufficient to consider that the model is adequate, so these results reaffirm the robustness and reliability of the model.

| Variable | α | Ordinal α | ω₁ | ω₂ | ω₃ | AVE |
| --- | --- | --- | --- | --- | --- | --- |
| Teaching Practices | 0.947 | 0.965 | 0.948 | 0.948 | 0.955 | 0.805 |
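Since the AVE is described as the mean of the item R² values, the figure in Table 3 can be recomputed from Table 2. The sketch below does this and also checks that each R² is close to the square of its standardized loading, as expected in a one-factor model. The dictionaries simply transcribe the rounded table values, so small rounding gaps are expected.

```python
# R² and standardized loadings (beta) transcribed from Table 2.
r_squared = {
    "Keywords": 0.773, "Trust": 0.830, "Relevant info.": 0.849,
    "Consequences": 0.684, "Descriptors": 0.786,
    "Subjective info.": 0.876, "Spam": 0.838,
}
beta = {
    "Keywords": 0.879, "Trust": 0.911, "Relevant info.": 0.921,
    "Consequences": 0.827, "Descriptors": 0.887,
    "Subjective info.": 0.936, "Spam": 0.915,
}

# AVE as the mean of the item R² values.
ave = sum(r_squared.values()) / len(r_squared)

# Largest gap between beta squared and the reported R² (rounding only).
max_gap = max(abs(beta[item] ** 2 - r_squared[item]) for item in r_squared)

print(f"AVE = {ave:.3f}")
print(f"largest |beta^2 - R^2| = {max_gap:.4f}")
```

The recomputed AVE rounds to the 0.805 shown in Table 3, and the gaps between β² and R² stay below 0.001, which is consistent with rounding of the published values.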

Figure 1 shows the beta standardized coefficients representing the factor weights of each item.

Factorial weights (beta) on the factor

Objective 2. Dimensions

In the subsequent section, the differences between dimensions are analysed to understand whether the use of a digital tool to facilitate the use of metacognitive strategies for self-regulation of learning in online teacher training enhances the ability to teach media and information literacy.

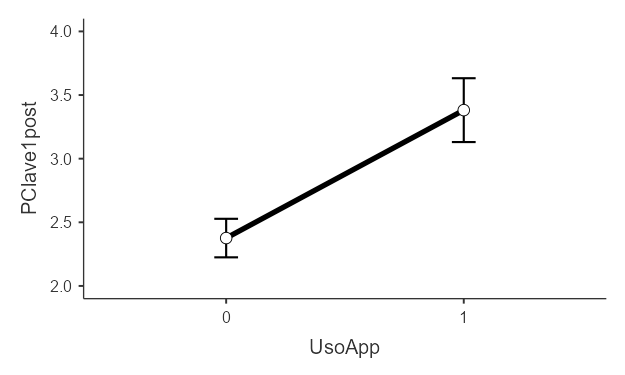

How to use keywords when using a search engine

According to the results from the Shapiro-Wilk analysis, it was verified that the data did not follow a normal distribution (p < .001). Therefore, Generalized Linear Models using the Gamma distribution were applied. First, the model's measure of fit was assessed using the coefficient of determination R². In this case, the model suggests that 25.6 % of the variability in terms of teaching improvement in the use of keywords in search engines can be explained by the use of metacognitive strategies through Metadig.

Analysing the results for the estimated parameters of the model (Table 4), the predictors (group and pretest) are statistically significant (p<0.001). When controlling for the effect of the pretest, it is found that the mean value of those who did not use Metadig to improve their teaching of media and information literacy is 2.38 points out of 4. In contrast, those who did use Metadig regularly could obtain 1 additional point in teaching management, thus reaching a score of 3.38 points.

The differences between the groups, after adjusting for the pretest effect, were confirmed as significant by the Bonferroni test (Table 5). These differences can be graphically seen in Figure 2.

| Names | Effect | Estimate | SE | 95 % CI Lower | 95 % CI Upper | z | p |
| --- | --- | --- | --- | --- | --- | --- | --- |
| (Intercept) | (Intercept) | 2.376 | 0.0772 | 2.233 | 2.532 | 30.76 | < .001 |
| UsoApp1 | 1 - 0 | 1.005 | 0.1466 | 0.726 | 1.299 | 6.86 | < .001 |
| PClave1 | PClave1 | 0.418 | 0.0631 | 0.293 | 0.547 | 6.63 | < .001 |

| Comparison (UsoApp) | Difference | SE | z | p (Bonferroni) |
| --- | --- | --- | --- | --- |
| 0 - 1 | -1.01 | 0.147 | -6.86 | < .001 |

Plot of estimated marginal means by group for How to use keywords when using a search engine

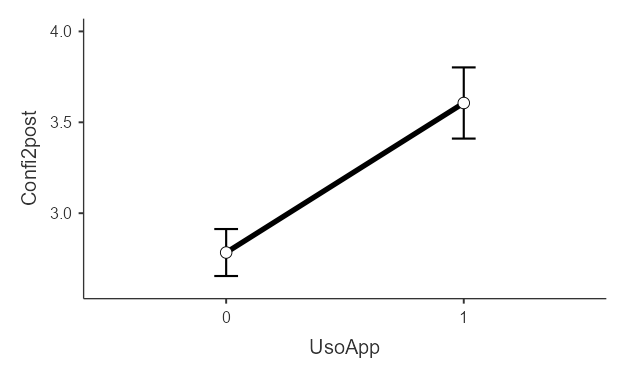
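The z values reported in the parameter table for this dimension behave like Wald statistics, that is, each estimate divided by its standard error. The sketch below recomputes them from the rounded table values. The dictionary keys are the variable names shown in the table, and the tolerance only reflects rounding of the published figures.

```python
# (estimate, standard error) pairs transcribed from the keyword-dimension table.
parameters = {
    "(Intercept)": (2.376, 0.0772),
    "UsoApp1": (1.005, 0.1466),
    "PClave1": (0.418, 0.0631),
}

# z values as printed in the table, for comparison.
reported_z = {"(Intercept)": 30.76, "UsoApp1": 6.86, "PClave1": 6.63}

# Wald statistic: estimate divided by its standard error.
wald_z = {name: est / se for name, (est, se) in parameters.items()}

for name, z in wald_z.items():
    print(f"{name:12s} z = {z:6.2f} (reported {reported_z[name]})")
```

Because there are only two groups, the post hoc table contains a single pairwise comparison, so its z value matches the group effect in absolute value and the Bonferroni correction leaves its p value unchanged.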

How to decide whether to trust information from the Internet

Once the Shapiro-Wilk test was examined, it was observed that the data did not follow a normal distribution (p < .001), so the Generalized Linear Models test with the Gamma distribution was used. Firstly, the model fit measure with R² was observed, which suggests that 28.2 % of the improvement in media literacy in deciding whether to trust information on the Internet could be explained by the regular use of metacognitive strategies through Metadig.

The results suggest that the predictors are also statistically significant (p<0.001). According to the parameter estimates in Table 6, when considering the effect of the pretest on the sample, those individuals who did not use Metadig to apply digital literacy would obtain a mean score of 2.78 out of 4. On the other hand, those who used the tool would achieve 3.61 points, which represents an increase of 0.82 points compared to the group that did not use this tool. The Bonferroni test (Table 7) confirms that this difference between the groups is statistically significant, as shown in Figure 3.

| Names | Effect | Estimate | SE | 95 % CI Lower | 95 % CI Upper | z | p |
| --- | --- | --- | --- | --- | --- | --- | --- |
| (Intercept) | (Intercept) | 2.784 | 0.0659 | 2.660 | 2.916 | 42.23 | < .001 |
| UsoApp1 | 1 - 0 | 0.822 | 0.1174 | 0.595 | 1.057 | 7.01 | < .001 |
| Confi2 | Confi2 | 0.457 | 0.0517 | 0.347 | 0.563 | 8.82 | < .001 |

| Comparison (UsoApp) | Difference | SE | z | p (Bonferroni) |
| --- | --- | --- | --- | --- |
| 0 - 1 | -0.822 | 0.117 | -7.01 | < .001 |

Plot of estimated marginal means by group for How to decide whether to trust information from the Internet

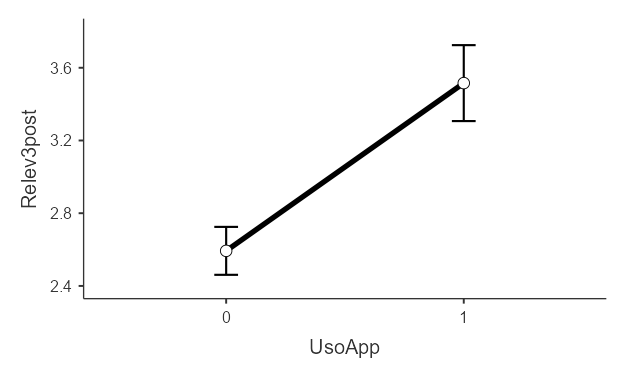

How to compare different web pages and decide relevant information

Analysis of the Shapiro-Wilk test showed that the data did not follow a normal distribution (p < .001). Therefore, the Generalized Linear Models test with a Gamma distribution was applied. Firstly, the measure of model fit was evaluated by means of the R² coefficient, indicating that 28 % of the variability in teacher improvement in media literacy, specifically in the ability to compare different web pages and determine the relevance of information for schoolwork, could be explained by the regular use of metacognitive strategies through Metadig.

The results obtained reveal that the predictors are also statistically significant (p<0.001). According to the parameter estimates in Table 8, when considering the effect of the pretest on the sample, those individuals who did not use Metadig to apply digital literacy would obtain a mean score of 2.59 out of 4. On the other hand, those who used this tool would achieve a score of 3.52 points, which represents an increase of 0.92 points compared to the group that did not use Metadig. The Bonferroni test (Table 9) confirms that this difference between the groups is statistically significant, as shown in Figure 4.

| Names | Effect | Estimate | SE | 95 % CI Lower | 95 % CI Upper | z | p |
| --- | --- | --- | --- | --- | --- | --- | --- |
| (Intercept) | (Intercept) | 2.593 | 0.0673 | 2.466 | 2.728 | 38.55 | < .001 |
| UsoApp1 | 1 - 0 | 0.923 | 0.1237 | 0.683 | 1.171 | 7.46 | < .001 |
| Relev3 | Relev3 | 0.423 | 0.0555 | 0.309 | 0.536 | 7.62 | < .001 |

| Comparison (UsoApp) | Difference | SE | z | p (Bonferroni) |
| --- | --- | --- | --- | --- |
| 0 - 1 | -0.923 | 0.124 | -7.46 | < .001 |

Plot of estimated marginal means by group for How to compare different web pages and decide relevant information

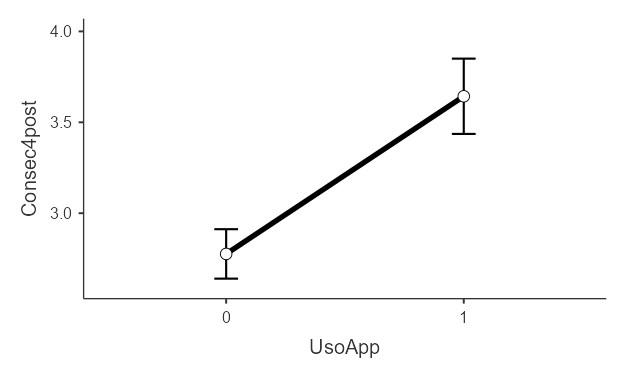

Understanding the consequences of making information publicly available online on social media

While analyzing the data obtained with the Shapiro-Wilk test, it was observed that the data did not follow a normal distribution (p < .001). Therefore, the Generalized Linear Models test with a Gamma distribution was used. The measure of model fit was then assessed using the R² coefficient, which indicates that 27.1 % of the variability in teacher improvement in media literacy related to understanding the consequences of making information public on social networks could be explained by the regular use of metacognitive strategies through the tool.

These results show that the predictors were also statistically significant (p<0.001). According to the parameters estimated in Table 10, when considering the effect of the pretest on the sample, it is observed that those individuals who did not use Metadig to apply digital literacy would obtain a mean score of 2.78 out of 4. On the other hand, those who used this tool would achieve a score of 3.64 points, which implies an increase of 0.87 points compared to the group that did not use this tool. The Bonferroni test (Table 11) confirms that the difference between the groups is statistically significant, as can be seen in Figure 5 below.

| Names | Effect | Estimate | SE | 95 % CI Lower | 95 % CI Upper | z | p |
| --- | --- | --- | --- | --- | --- | --- | --- |
| (Intercept) | (Intercept) | 2.776 | 0.0694 | 2.646 | 2.915 | 40.01 | < .001 |
| UsoApp1 | 1 - 0 | 0.867 | 0.1229 | 0.627 | 1.114 | 7.06 | < .001 |
| Consec4 | Consec4 | 0.497 | 0.0494 | 0.391 | 0.601 | 10.06 | < .001 |

| Comparison (UsoApp - UsoApp) | Difference | SE | z | p (Bonferroni) |
| --- | --- | --- | --- | --- |
| 0 - 1 | -0.867 | 0.123 | -7.06 | < .001 |

Estimated marginal means by group for Understanding the consequences of making information publicly available online on social media

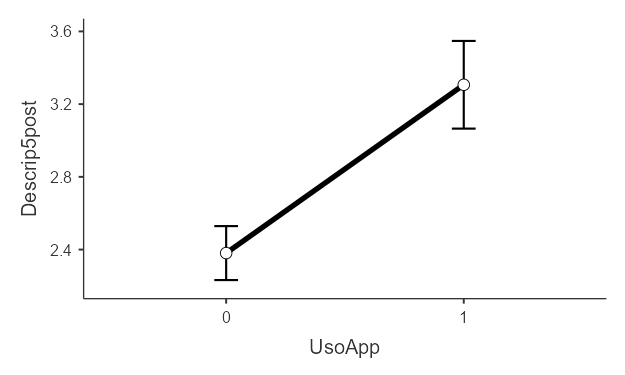

How to use the short description below the links in the list of results of a search

The Shapiro-Wilk test showed that the data do not follow a normal distribution (p = .002). Therefore, a Generalized Linear Model with a Gamma distribution was used. First, the fit of the model was evaluated using the R² coefficient, which suggests that 23.6 % of the variability in teacher improvement in media literacy, specifically in the ability to use the brief description that appears under the links in the list of search results, could be explained by the regular use of metacognitive strategies through Metadig.

The findings reveal that the predictors are also statistically significant (p < .001). According to the parameter estimates in Table 12, when considering the effect of the pretest on the sample, those individuals who did not use Metadig to apply digital literacy would obtain a mean score of 2.38 out of 4. In contrast, those who used this tool would achieve a score of 3.31 points, which implies an increase of 0.93 points compared to the group that did not use this tool. The Bonferroni test (Table 13) confirms that this difference between the groups is statistically significant, as shown in Figure 6.

| Names | Effect | Estimate | SE | 95 % CI Lower | 95 % CI Upper | z | p |
| --- | --- | --- | --- | --- | --- | --- | --- |
| (Intercept) | (Intercept) | 2.381 | 0.0757 | 2.240 | 2.534 | 31.44 | < .001 |
| UsoApp1 | 1 - 0 | 0.926 | 0.1425 | 0.654 | 1.211 | 6.50 | < .001 |
| Descrip5 | Descrip5 | 0.387 | 0.0680 | 0.254 | 0.524 | 5.69 | < .001 |

| Comparison (UsoApp - UsoApp) | Difference | SE | z | p (Bonferroni) |
| --- | --- | --- | --- | --- |
| 0 - 1 | -0.926 | 0.142 | -6.50 | < .001 |

Estimated marginal means by group for How to use the short description below the links in the list of results of a search

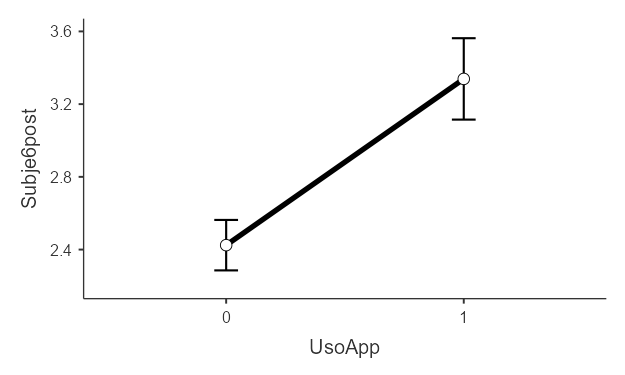

How to detect whether the information is subjective or biased

The Shapiro-Wilk test showed that the data do not follow a normal distribution (p = .002). It was therefore decided to use a Generalized Linear Model with a Gamma distribution. In the first stage, the ability of the model to fit the data was assessed using the R² coefficient. The results show that 25.9 % of the improvement in teacher competence in media literacy, specifically in the ability to identify whether information is subjective or biased, could be explained by the regular application of metacognitive strategies through the tool.

In addition, statistically significant results (p < .001) were obtained for the predictors. The parameter estimates in Table 14 indicate that, when considering the effect of the pretest on the sample, those individuals who did not use Metadig to promote digital literacy would obtain a mean score of 2.42 out of 4. On the other hand, those who made use of this tool would achieve a score of 3.34 points, which represents an increase of 0.92 points compared to the group that did not use Metadig. The results of the Bonferroni test (Table 15) confirm that this difference between the groups is statistically significant, as shown in Figure 7.

| Names | Effect | Estimate | SE | 95 % CI Lower | 95 % CI Upper | z | p |
| --- | --- | --- | --- | --- | --- | --- | --- |
| (Intercept) | (Intercept) | 2.424 | 0.0708 | 2.291 | 2.568 | 34.24 | < .001 |
| UsoApp1 | 1 - 0 | 0.915 | 0.1327 | 0.660 | 1.180 | 6.89 | < .001 |
| Subje6 | Subje6 | 0.352 | 0.0608 | 0.235 | 0.472 | 5.79 | < .001 |

| Comparison (UsoApp - UsoApp) | Difference | SE | z | p (Bonferroni) |
| --- | --- | --- | --- | --- |
| 0 - 1 | -0.915 | 0.133 | -6.89 | < .001 |

Estimated marginal means by group for How to detect whether the information is subjective or biased

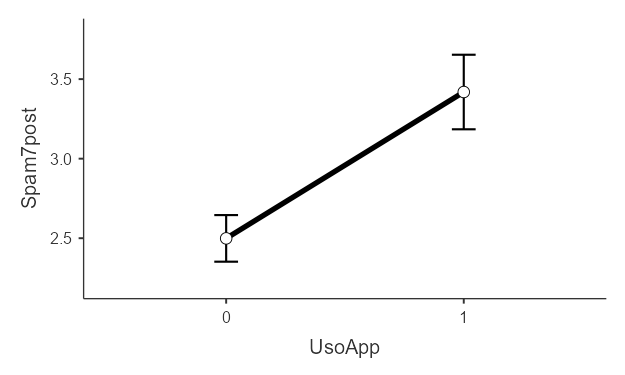

How to detect phishing or spam emails

The Shapiro-Wilk test showed that the data do not follow a normal distribution (p < .001). Therefore, a Generalized Linear Model with a Gamma distribution was employed. First, the model's fit was assessed using the coefficient of determination R². In this case, the model suggests that 22 % of the variability in teaching improvement in detecting phishing or spam emails can be explained by the use of metacognitive strategies through the instrument.

Analysis of the estimated parameters of the model (Table 16) shows that the predictors (group and pretest) are statistically significant (p < .001). When the effect of the pretest was controlled for, the mean value of those who did not use Metadig to improve their media and information literacy teaching was 2.50 points out of 4. In contrast, those who used Metadig regularly would score an additional 0.92 points in teaching management, thus reaching a score of 3.42 points.

The differences between the groups, once adjusted for the pretest effect, were confirmed as significant by the Bonferroni test (Table 17). These differences can be seen graphically in Figure 8.

| Names | Effect | Estimate | SE | 95 % CI Lower | 95 % CI Upper | z | p |
| --- | --- | --- | --- | --- | --- | --- | --- |
| (Intercept) | (Intercept) | 2.499 | 0.0747 | 2.359 | 2.650 | 33.44 | < .001 |
| UsoApp1 | 1 - 0 | 0.920 | 0.1386 | 0.651 | 1.200 | 6.64 | < .001 |
| Spam7 | Spam7 | 0.369 | 0.0584 | 0.257 | 0.483 | 6.33 | < .001 |

| Comparison (UsoApp - UsoApp) | Difference | SE | z | p (Bonferroni) |
| --- | --- | --- | --- | --- |
| 0 - 1 | -0.920 | 0.139 | -6.64 | < .001 |

Estimated marginal means by group for How to detect phishing or spam

According to the results in Table 18, consistent use of metacognitive strategies via the Metadig tool contributes significantly to improving the teaching of various aspects of media literacy. Specifically, it accounts for 28.2 % of the improvement in teaching about the reliability of information hosted on the Internet, followed by 27.4 % in discriminating the relevance of sources and 27.1 % in understanding the consequences of sharing information on social networks. In addition, the use of Metadig explains roughly a quarter of the variability in three further dimensions: the recognition of subjective information (25.9 %), the use of keywords (25.6 %) and the use of search engine descriptors (23.6 %). Furthermore, there is a significant advancement, albeit to a lesser extent, in the dimension of detecting phishing or spam in emails (22 %). It is important to note that significant differences were found between the groups in all cases, controlling for the pretest effect and assuming equality of the groups.

| Dimension | R² | Mean G-0 | Mean G-1 | Difference |
| --- | --- | --- | --- | --- |
| Keywords | 0.256 | 2.38 | 3.38 | 1 |
| Trust | 0.282 | 2.38 | 3.61 | 0.822 |
| Relevant info. | 0.274 | 2.59 | 3.52 | 0.923 |
| Consequences | 0.271 | 2.78 | 3.64 | 0.867 |
| Descriptors | 0.236 | 2.38 | 3.31 | 0.926 |
| Subjective info. | 0.259 | 2.42 | 3.34 | 0.915 |
| Spam | 0.220 | 2.50 | 3.42 | 0.920 |

Analyzing the mean differences between the groups in each dimension and controlling for the pretest effect, it was observed that the greatest difference was found in the instruction concerning the use of keywords (1 point), followed by the use of descriptors (0.93) and the evaluation of the relevance of the information available on the Internet (0.92). At the same level, we found very high differences in the detection of spam (0.92) and subjective information (0.92). All these variations showed a range equal to or close to one point on the four-point scale used for the measurements. In addition, substantial differences were found in the following dimensions: understanding the implications of sharing information on social networks (0.87) and identifying reliable information (0.82), between those who used and those who did not use Metadig.
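The two orderings discussed above (by explained variability and by adjusted difference between groups) can be reproduced from the R² and Difference columns of Table 18. The following Python sketch is only an illustration; the dictionary name `table_18` and the variable names are hypothetical, and the values are copied as reported.

```python
# Rows copied from Table 18: dimension -> (R2, adjusted difference between groups).
table_18 = {
    "Keywords": (0.256, 1.0),
    "Trust": (0.282, 0.822),
    "Relevant info.": (0.274, 0.923),
    "Consequences": (0.271, 0.867),
    "Descriptors": (0.236, 0.926),
    "Subjective info.": (0.259, 0.915),
    "Spam": (0.220, 0.920),
}

# Dimensions ordered from largest to smallest share of explained variability.
by_r2 = sorted(table_18, key=lambda d: table_18[d][0], reverse=True)

# Dimensions ordered from largest to smallest adjusted difference between groups.
by_difference = sorted(table_18, key=lambda d: table_18[d][1], reverse=True)

for name in by_difference:
    r2, diff = table_18[name]
    print(f"{name:<17} difference = {diff:.2f}  R2 = {r2 * 100:.1f} %")
```

The two rankings do not coincide: the dimension with the largest adjusted difference (keywords) is not the one with the highest R² (reliability of information), since R² also reflects the contribution of the pretest covariate.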

CONCLUSIONS

The study has two main objectives: to assess the reliability of the selected PISA 2018 items for measuring information literacy teaching practices, and to find out whether the use of a digital tool such as Metadig, which develops metacognitive strategies for the self-regulation of learning, improves the teaching of media and information literacy. To this end, the seven dimensions that, according to Pérez-Escoda et al. (2019), teachers need to master in order to teach media and information literacy were analysed (Gutiérrez-Martín et al., 2022).

First, the effectiveness of the PISA 2018 instrument in assessing information literacy pedagogical practices is confirmed, supporting previous research (García-Llorente et al., 2020). Furthermore, it highlights the importance of integrating metacognitive strategies into teacher education, which is considered crucial for improving information literacy (Zimmerman & Moylan, 2009). Validity and reliability analyses of the instrument support its use in measuring information literacy skills (OECD, 2017, 2019).

Furthermore, the impact of the use of Metadig on media and information literacy teaching is analysed. Significant improvement is observed in several key dimensions. Regarding the use of keywords in search engines, teachers develop strategies to conduct more effective searches, promoting students' adaptability and comprehension (Reisoğlu et al., 2020; Zhou, 2023). The tool also strengthens the ability to assess the reliability of online information, which is essential in a context of misinformation (Muijs & Bokhove, 2020). It also facilitates the comparison of information sources and the determination of their relevance, promoting critical thinking and informed decision-making (Bannert et al., 2009; Kaczkó & Ostendorf, 2023).

Teachers using Metadig are found to have a better understanding of the consequences of sharing information online, encouraging strategies for responsible use of social networks (De Bruyckere et al., 2016). They improve their ability to use short descriptors in search results, enhancing the analysis and synthesis of information (Dignath & Büttner, 2018). As a result, teachers more accurately detect biased information, fostering the development of pedagogical strategies that promote learner autonomy (Dobber et al., 2017). Finally, they are better able to identify phishing or spam emails, increasing online safety awareness and developing protection strategies (Zheng et al., 2016).

These results highlight the effectiveness of both the PISA 2018 instrument and Metadig in improving the teaching of media and information literacy. After reviewing each one of the dimensions, the study reveals that the use of Metadig, a digital tool that promotes metacognitive strategies, has a positive impact on various dimensions of media and information literacy teaching. This is evidenced by improving the use of keywords in search engines, developing the ability to evaluate online information, improving the determination of the relevance of content for schoolwork, understanding the implications of sharing information online, strengthening information analysis and synthesis skills, promoting critical evaluation of the veracity of information, and raising awareness of online safety and identifying risks such as phishing emails or spam.

DISCUSSION

Finally, the present research provides significant evidence on the influence of pedagogical practices on the development of information literacy competence, supporting previous findings (García-Llorente et al., 2020). Furthermore, it shows that the use of digital tools such as Metadig improves dimensions related to information literacy competence (Azevedo & Witherspoon, 2009).

The integration of information technologies with metacognitive strategies in teacher education is crucial for developing media literacy (Beetham & Sharpe, 2013). This approach helps students to identify reliable sources and make informed decisions (Kaczkó & Ostendorf, 2023; Villaplana et al., 2022). Therefore, teacher training in the use of metacognitive strategies is recommended to improve their self-regulated learning skills.

Furthermore, the importance of continuous training in media and information literacy for teachers is highlighted (Cabero-Almenara & Palacios-Rodríguez, 2020). The study provides empirical evidence of the role of digital tools in the development of metacognition in education, thus strengthening disciplinary practice in media and information literacy.

Limitations of the study

There are some limitations to this study. On the one hand, the evaluation was conducted in the short term, which prevents us from fully exploring the long-term impact of Metadig use on digital literacy skills (Area & Pessoa, 2012; Azevedo & Witherspoon, 2009). In addition, variables such as prior experience with technology (Martin & Madigan, 2006) or level of familiarity with metacognitive strategies were not considered, which could influence the results (Bannert et al., 2009).

A further limitation is self-selection bias, which makes generalization difficult. Future research should address these issues to better understand the impact of Metadig on metacognition and media literacy.

Future lines of research

Further research on Metadig should include longitudinal studies to measure its impact over time. It would also be worthwhile to compare Metadig with other tools to assess its effectiveness in improving metacognitive strategies and digital literacy.

It could also be relevant to explore the link between innovative pedagogical practices and information literacy using PISA data. In addition, investigating moderating variables such as intrinsic motivation and adaptability may shed light on the effectiveness of the tool. This type of research helps to understand the benefits of interventions in diverse educational and social settings.

REFERENCES

Area, M., & Pessoa, T. (2012). De lo sólido a lo líquido: Las nuevas alfabetizaciones ante los cambios culturales de la Web 2.0. Comunicar, 38, 13-20.

Arjaya, I. B. A., Hermawan, I. M. S., Ekayanti, N. W., & Paraniti, A. A. I. (2023). Metacognitive contribution to biology pre-service teacher’s digital literacy and self-regulated learning during online learning. International Journal of Instruction, 16(1), 455-468. https://doi.org/10.29333/iji.2023.16125a

Azevedo, R., & Witherspoon, A. M. (2009). Self-regulated learning with hypermedia. In D. J. Hacker, J. Dunlosky & A. C. Graesser (Eds.), Handbook of metacognition in education (pp. 278-298). Mahwah, Erlbaum.

Bannert, M., Hildebrand, M., & Mengelkamp, C. (2009). Effects of a metacognitive support device in learning environment. Computers in Human Behavior, 25(4), 829-835. https://doi.org/10.1016/j.chb.2008.07.002

Beetham, H., & Sharpe, R. (2013). Rethinking pedagogy for a digital age: Designing for 21st century learning. Routledge. https://doi.org/10.4324/9780203078952

Cabero-Almenara, J., & Palacios-Rodríguez, A. (2020). Marco Europeo de Competencia Digital Docente «DigCompEdu». Traducción y adaptación del cuestionario «DigCompEdu Check-In». EDMETIC, 9(1), 213-234. https://doi.org/10.21071/edmetic.v9i1.12462

Coll Salvador, C., Díaz Barriga Arcedo, F., Engel Rocamora, A., & Salina Ibáñez, J. (2023). Evidencias de aprendizaje en prácticas educativas mediadas por tecnologías digitales. RIED-Revista Iberoamericana de Educación a Distancia, 26(2), 9-25. https://doi.org/10.5944/ried.26.2.37293

De Bruyckere, P., Kirschner, P. A., & Hulshof, C. D. (2016). Technology in Education: What Teachers Should Know. American Educator, 40(1), 12-18.

Decision (EU) 2022/2481 of the European Parliament and of the Council of 14 December 2022 establishing the Digital Decade Policy Programme 2030. Official Journal of the European Union, 323, 4-26. http://data.europa.eu/eli/dec/2022/2481/oj

Dignath, C., & Büttner, G. (2018). Components of fostering self-regulated learning among students: A meta-analysis on intervention studies at primary and secondary school level. Metacognition and Learning, 13(2), 127-151. https://doi.org/10.1007/s11409-018-9181-x

Dobber, M., Zwart, R., Tanis, M., & van Oers, B. (2017). Literature review: The role of the teacher in inquiry-based education. Educational Research Review, 22, 194-214. https://doi.org/10.1016/j.edurev.2017.09.002

Duffy, G. G., Miller, S., Parsons, S., & Meloth, M. (2009). Teachers as metacognitive professionals. In D. J. Hacker, J. Dunlosky & A. C. Graesser (Eds.), Handbook of metacognition in education (pp. 240-256). Routledge/Taylor & Francis Group.

Efklides, A. (2011). Interactions of metacognition with motivation and affect in self-regulated learning: The MASRL model. Educational Psychologist, 46, 6-25. https://doi.org/10.1080/00461520.2011.538645

Escobedo Portillo, M. T., Hernández Gómez, J. A., Estebané Ortega, V., & Martínez Moreno, G. (2016). Modelos de ecuaciones estructurales: Características, fases, construcción, aplicación y resultados. Ciencia & trabajo, 18(55), 16-22. https://doi.org/10.4067/S0718-24492016000100004

European Commission. (2021). Plan de Acción de Educación Digital (2021-2027). https://education.ec.europa.eu/es/focus-topics/digital-education/action-plan

European Union. (2021). Europe's Digital Decade. https://digital-strategy.ec.europa.eu/en/policies/europes-digital-decade

García-Llorente, H. J., Martínez-Abad, F., & Rodríguez-Conde, M. J. (2020). Evaluación de la competencia informacional observada y autopercibida en estudiantes de educación secundaria obligatoria en una región española de alto rendimiento PISA. Revista Electrónica Educare, 24(1), 24-40. https://doi.org/10.15359/ree.24-1.2

Gutiérrez-Martín, A., Pinedo-González, R., & Gil-Puente, C. (2022). Competencias TIC y mediáticas del profesorado: Convergencia hacia un modelo integrado AMI-TIC / ICT and Media competencies of teachers. Convergence towards an integrated MIL-ICT model. Comunicar, 70, 21-33. https://doi.org/10.3916/C70-2022-02

Halamish, V. (2018). Pre-service and in-service teachers’ metacognitive knowledge of learning strategies. Frontiers in Psychology, 9, 2152. https://doi.org/10.3389/fpsyg.2018.02152

Huff, J. D., & Nietfeld, J. L. (2009). Using strategy instruction and confidence judgments to improve metacognitive monitoring. Metacognition and Learning, 4, 161-176. https://doi.org/10.1007/s11409-009-9042-8

INTEF. (2022). Marco de Referencia de la Competencia Digital Docente Actualizado. https://intef.es/

Kaczkó, É., & Ostendorf, A. (2023). Critical thinking in the community of inquiry framework: An analysis of the theoretical model and cognitive presence coding schemes. Computers & Education, 193, 104662. https://doi.org/10.1016/j.compedu.2022.104662

Martin, A., & Madigan, D. (2006). Digital literacies for learning. Facet Publishing.

McDougall, J., Brites, M. J., Couto, M. J., & Lucas, C. (2019). Digital literacy, fake news and education. Cultura y Educación, 31(2), 203-212. https://doi.org/10.1080/11356405.2019.1603632

Monereo, C. (Coord.). (2011). Internet y competencias básicas. Aprender a colaborar, a comunicarse, a participar, a aprender. Editorial Graó.

Muijs, D., & Bokhove, C. (2020). Metacognition and Self-Regulation: Evidence Review. Education Endowment Foundation. https://educationendowmentfoundation.org.uk/evidence-summaries/evidence-reviews/metacognition-and-self-regulation-review/

Núñez, J. C., Tuero, E., Fernández, E., Añón, F. J., Manalo, E., & Rosário, P. (2022). Efecto de una intervención en estrategias de autorregulación en el rendimiento académico en Primaria: estudio del efecto mediador de la actividad autorregulatoria. Revista de Psicodidáctica, 27(1). https://doi.org/10.1016/j.psicod.2021.09.001

OECD. (2017). Teacher questionnaire for PISA 2018. Test language teacher. International option. OECD. https://bit.ly/3ZltsTY

OECD. (2019). PISA 2018 assessment and analytical framework. PISA. OECD Publishing. https://doi.org/10.1787/b25efab8-en

Ortega-Ruipérez, B., & Castellanos, A. (2021). Design and development of a digital tool for metacognitive strategies in self-regulated learning. In EduLearn 21. 13th International Conference on Education and New Learning Technologies Online Conference. 5-6 July, 2021 (pp. 1203-1211). IATED Academy. https://doi.org/10.21125/edulearn.2021.0300

Ortega-Ruipérez, B., & Castellanos, A. (2023). Guidelines for instructional design of courses for the development of self-regulated learning for teachers. South African Journal of Education, 43(3). https://doi.org/10.15700/saje.v43n3a2202

Panadero, E. (2017). A review of self-regulated learning: Six models and four directions for research. Frontiers in Psychology, 8, 422. https://doi.org/10.3389/fpsyg.2017.00422

Pérez-Escoda, A. (2017). Alfabetización mediática, TIC y competencias digitales. UOC.

Pérez-Escoda, A., García-Ruiz, R., & Aguaded, I. (2019). Dimensions of digital literacy based on five models of development / Dimensiones de la alfabetización digital a partir de cinco modelos de desarrollo. Culture and Education, 31(2), 232-266. https://doi.org/10.1080/11356405.2019.1603274

Perry, J., Lundie, D., & Golder, G. (2019). Metacognition in schools: what does the literature suggest about the effectiveness of teaching metacognition in schools? Educational Review, 71(4), 483-500. https://doi.org/10.1080/00131911.2018.1441127

Punie, Y., & Redecker, C. (2017). European Framework for the Digital Competence of Educators: DigCompEdu. https://doi.org/10.2760/178382

Reisoğlu, İ., Toksoy, S. E., & Erenler, S. (2020). An analysis of the online information searching strategies and metacognitive skills exhibited by university students during argumentation activities. Library & Information Science Research, 42(3). https://doi.org/10.1016/j.lisr.2020.101019

Ruiz, M., Pardo, A., & San Martín, R. (2010). Modelos de ecuaciones estructurales. Papeles del Psicólogo, 31(1).

Salcedo, D. M., Ibarra, K. A., Ceballos, V. I., & Pazmiño, E. S. (2022). Digital meanings: multimodal communications and multiliteracy. Recimundo. Revista Científica Mundo de la Investigación y el Conocimiento, 3, 147-154. https://doi.org/10.26820/recimundo/6

Schreiber, J. B. (2021). Issues and recommendations for exploratory factor analysis and principal component analysis. Research in Social and Administrative Pharmacy, 17(5), 1004-1011. https://doi.org/10.1016/j.sapharm.2020.07.027

Sonnenberg, C., & Bannert, M. (2019). Using Process Mining to examine the sustainability of instructional support: How stable are the effects of metacognitive prompting on self-regulatory behavior? Computers in Human Behavior, 96, 259-272. https://doi.org/10.1016/j.chb.2018.06.003

Stephanou, G., & Mpiontini, M. (2017). Metacognitive Knowledge and Metacognitive Regulation in Self-Regulatory Learning Style, and in Its Effects on Performance Expectation and Subsequent Performance across Diverse School Subjects. Psychology, 8, 1941-1975. https://doi.org/10.4236/psych.2017.812125

Suárez, E. J., & González, L. M. (2021). Puntos de encuentro entre pensamiento crítico y metacognición para repensar la enseñanza de ética. Sophia, Colección de Filosofía de la Educación, 30, 181-202. https://doi.org/10.17163/soph.n30.2021.06

UNESCO. (2016). Tecnologías digitales al servicio de la calidad educativa: una propuesta de cambio centrada en el aprendizaje para todos. https://unesdoc.unesco.org/ark:/48223/pf0000245115

Vázquez-López, V., & Huerta-Manzanilla, E. L. (2021). Factors Related with Underperformance in Reading Proficiency, the Case of the Programme for International Student Assessment 2018. European Journal of Investigation in Health, Psychology and Education, 11(3), 813-828. https://doi.org/10.3390/ejihpe11030059

Villaplana, V., Torrado, S., & Ródenas, G. (2022). Capítulo 4. Alfabetización mediática e innovación docente. Hacia un enfoque crítico de la docencia de la Comunicación desde las Pedagogías visuales. Espejo De Monografías De Comunicación Social, 9, 59-74. https://doi.org/10.52495/c4.emcs.9.p95

Wall, K., & Hall, E. (2016). Teachers as metacognitive role models. European Journal of Teacher Education, 39(4), 403-418. https://doi.org/10.1080/02619768.2016.1212834

Wigfield, A., & Cambria, J. (2010). Students’ achievement values, goal orientations, and interest: Definitions, development, and relations to achievement outcomes. Developmental Review, 30(1). https://doi.org/10.1016/j.dr.2009.12.001

Zheng, L., Li, X., & Chen, F. (2016). Effects of a mobile self-regulated learning approach on students’ learning achievements and self-regulated learning skills. Innovations in Education and Teaching International, 55(6), 1-9. https://doi.org/10.1080/14703297.2016.1259080

Zhou, M. (2023). Students’ metacognitive judgments in online search: a calibration study. Education and Information Technologies, 28, 2619-2638. https://doi.org/10.1007/s10639-022-11217-y

Zimmerman, B. J. (2008). Investigating Self-Regulation and Motivation: Historical Background, Methodological Developments, and Future Prospects. American Educational Research Journal, 45, 166-183. https://doi.org/10.3102/0002831207312909

Zimmerman, B. J., & Moylan, A. R. (2009). Self-Regulation. Where Metacognition and Motivation Intersect. In D. J. Hacker, J. Dunlosky & A. C. Graesser (Eds.), Handbook of metacognition in education (pp. 278-298). Mahwah, Erlbaum.

Reception: 01 December 2023

Accepted: 29 February 2024

OnlineFirst: 11 April 2024

Publication: 01 July 2024