Estudios

MULTIDIMENSIONAL RESEARCH ON UNIVERSITY ENGAGEMENT USING A MIXED METHOD APPROACH

INVESTIGACIÓN MULTIDIMENSIONAL DEL ENGAGEMENT UNIVERSITARIO A TRAVÉS DE UNA METODOLOGÍA MIXTA


Educación XX1, vol. 24, no. 2, pp. 65-96, 2021

Universidad Nacional de Educación a Distancia

This work is licensed under Creative Commons Attribution-NonCommercial 3.0 International.

Received: 13 October 2020

Accepted: 31 January 2021

How to reference this article / Cómo referenciar este artículo:

Benito Mundet, H., Llop Escorihuela, E., Verdaguer Planas, M., Comas Matas, J., Lleonart Sitjar, A., Orts Alis, M., Amadó Dodony, A., & Rostan Sánchez, C. (2021). Multidimensional research on university engagement using a mixed method approach. Educación XX1, 24(2), 65-96. https://doi.org/10.5944/educXX1.28561

Benito Mundet, H., Llop Escorihuela, E., Verdaguer Planas, M., Comas Matas, J., Lleonart Sitjar, A., Orts Alis, M., Amadó Dodony, A., y Rostan Sánchez, C. (2021). Investigación multidimensional del engagement universitario a través de una metodología mixta. Educación XX1, 24(2), 65-96. https://doi.org/10.5944/educXX1.28561

Abstract: The commitment or academic involvement (engagement) of university students has become a fundamental element of their welfare and academic performance and is also related to their professional future and social commitment. For this reason, defining the concept and providing assessment strategies and tools are essential to understanding the learning experiences that enhance students’ academic involvement. To develop our research, we used a mixed quantitative and qualitative methodology: exploratory and confirmatory factor analysis on the one hand, and discussion groups using the nominal group technique on the other. We set three objectives: first, to delve into the multidimensional model of the construct; second, to validate a questionnaire that allows for evaluation of the students’ perception of the learning methodologies used in the classroom; and third, to check the manageability of nominal groups as a qualitative method of analysis. The results demonstrate that our new proposal provides a statistically valid instrument aimed at determining students’ perceptions of their own engagement and an effective, efficient and motivating qualitative method for students. However, regarding the multidimensionality of the construct, contrary to the more accepted theoretical point of view that considers three dimensions of engagement (behaviour, cognition and emotion), our results only reveal two dimensions (cognitive-emotional and behavioural). In the discussion and comments section we give possible explanations for this contradiction.

Keywords: Learner engagement, classroom environment, higher education, perception test.

Resumen: El compromiso o la implicación académica (engagement en inglés) de los estudiantes universitarios se ha convertido en un elemento fundamental de su bienestar y de su rendimiento académico y, además, está muy relacionado también con su futuro profesional y compromiso social. Es por esta razón que la definición de dicho concepto y la disposición de instrumentos de evaluación y estrategias de análisis son imprescindibles para conocer las experiencias de aprendizaje que conducen a mejorar la implicación académica del estudiante. Para el desarrollo de esta investigación hemos utilizado una metodología mixta cuantitativa y cualitativa: análisis factorial exploratorio y confirmatorio, por un lado, y grupos de discusión mediante la técnica de los grupos nominales, por otro. Los objetivos que nos hemos planteado son tres: primero, ahondar en el modelo multidimensional del constructo engagement; segundo, validar un cuestionario que permita evaluar la percepción que tienen los estudiantes de las metodologías de aprendizaje que se utilizan en el aula, y tercero, comprobar la manejabilidad de los grupos nominales como método cualitativo de análisis. Los resultados demuestran que nuestra nueva propuesta ofrece un instrumento estadísticamente válido para determinar las percepciones del propio engagement y un método cualitativo de utilización eficaz, eficiente y motivador para los estudiantes. Sin embargo, respecto a la multidimensionalidad del constructo, y contrariamente al punto de vista teórico más aceptado que considera la existencia de tres dimensiones del engagement (comportamiento, cognición y emoción), nuestros resultados solamente revelan dos dimensiones (cognitivo-emocional y comportamental). En la discusión y comentarios damos posibles explicaciones a dicha contradicción.

Palabras clave: Compromiso de los estudiantes, ambiente de la clase, enseñanza superior, test de percepción.

INTRODUCTION

Universities currently face numerous challenges (Altbach et al., 2009; Collie et al., 2017), including student overcrowding, access to and generation of knowledge through new information and communication technologies, the creation of jobs that require new professional skills, and professional mobility (both geographical and between specialities). If we add to this the fixed mindset of universities in adapting to the demands of the labour market and the new professional profiles required by companies, it should come as no surprise that a good number of students are showing signs of disaffection with the academic world. Within this context of universities being in a permanent state of transition, student engagement, together with the contradictions and controversies that come with it, takes on greater significance.

The concept of engagement encompasses a broad spectrum of phenomena in education, including other aspects such as connection, commitment, involvement, and students adapting to their educational environment. This would be the reverse of student disconnection, disaffection or alienation from academic affairs. In extreme cases, students will even break off from their studies. In the aforementioned scenario, engagement is considered to be the most appropriate conceptual framework for understanding and preventing school or university dropout (Archambault et al., 2009; Appleton et al., 2008). However, despite a lack of engagement being widely associated with dropout in the literature, we believe it more appropriate to approach the phenomenon from a positive viewpoint: as those factors that provide the right context for students to develop their learning potential (Schnitzler et al., 2020; Zyngier, 2008).

Newmann et al. (1992) highlighted the difficulty in finding an operational definition of engagement. Perhaps this is what makes it such a fragmented concept (Archambault et al., 2009). A definition of engagement will also depend on the goals we establish for its application. If we are only interested in academic performance, some simple behavioural components such as participation in the tasks required by the course and students fulfilling the requirements of the teaching institution may be sufficient for proper engagement (Finn, 1989). However, if the aim is for students to engage in lifelong learning and develop a taste for the search for knowledge and culture, as well as involvement in their social and community context (Zyngier, 2007), then the definition of engagement must also encompass other components. In line with this, Shernoff (2013) suggested distinguishing between engagement with a lower case ‘e’ and Engagement with a capital ‘E’. This distinction is relevant because, as Zyngier (2008) suggested, engagement may not necessarily be a predictor of academic achievement. In fact, a significant number of excellent students have been found to feel disaffection for formal education, have poor participation beyond strictly academic tasks and have little feeling of belonging to the teaching institution where they study. Schlechty (2002) regarded these students as “ritually engaged” and passively committed.

Some authors have proposed an integrative and multidimensional concept of Engagement (with a capital E) that includes from two to four psychological dimensions involved in this process (Appleton et al., 2008). However, consistent with theory, a tripartite dimensional construct (behaviour, cognition and emotion) is considered the stronger conceptualisation of engagement (Christenson et al., 2012). This multidimensional definition has several advantages. Firstly, it encompasses the different points of view through which students’ relationships with academic institutions have traditionally been channelled (among others, membership, participation, involvement and connection). Secondly, it allows for a developmental approach to the phenomenon. Some studies have shown that engagement varies according to the stages of the life cycle, depending on bio-psycho-social development and the experience at the educational institution (Fredricks et al., 2004; Lam et al., 2016). Thirdly, it provides for research using empirical studies, the results of which can be used in intervention programmes. Finally, it includes a transactional perspective, whereby engagement emerges from the student’s interaction with the academic environment (Shernoff, 2013).

Each of the three dimensions included in the integrative perspective mentioned above is composed of more specific elements (Appleton et al., 2006). The behavioural dimension includes the observable aspects of engagement: among others, task fulfilment, participation in class, in the institution and in the social environment, work organisation and effort invested in studies. The cognitive dimension refers to the mental resources employed by students in their learning: metacognitive strategies in the learning process (planning, monitoring and evaluation), attention and concentration, sense of belonging to the class group, the profession or the teaching institution, quality and depth of thought, creativity and so on. The emotional dimension refers to the affection that the student feels for the teaching institution, for the academic content and for learning and culture in general. This last dimension includes motivation, interest, anxiety, pleasure, discomfort and the feeling of connection or belonging.

As mentioned earlier, the concept of engagement is transactional. That is, the characteristics of the behavioural, cognitive and emotional dimensions related to each subject vary by individual and context. In other words, engagement varies according to the learning environment and the quality of the students’ experience (Shernoff et al., 2016). As Fredricks et al. (2004) suggested, engagement is malleable; that is, it develops in line with the social, contextual and cultural influences exerted upon it and can therefore be changed by means of interventions planned for this purpose. This is despite the fact that many details of how context may influence the dimensions of engagement remain controversial and need more research. In this sense, it is important to measure domain-specific situations, social interactions, methodologies and tasks showing differences in academic engagement across different contexts. Currently, there is a body of research that reveals the importance of some of these elements. These make up a framework of complex interactions that comprise socio-political factors (Kahu, 2013; McMahon & Portinelli, 2004), resilience to adversities (Rodríguez et al., 2018), family (Veiga et al., 2016), school (Fredricks et al., 2004; Lee & Shute, 2010; Fernández-Zabala et al., 2016), classmates (Lam et al., 2016; Capella et al., 2013) and teachers (Van Uden et al., 2014). Other parameters that are foreseeably important for engagement include the characteristics of tasks that teachers set for their students (Newmann et al., 1992), and some studies already point in this direction (Shernoff et al., 2016). Thus, it is important to determine malleable and interactive contextual factors involved in engagement that can be targeted in interventions in order to improve the academic, professional and civic training provided for students (Christenson et al., 2012). Moreover, it is necessary to evaluate the effectiveness and efficiency of teaching interventions and which aspects can be influenced to adapt them to the diverse students and situations found in the classroom.

The specific aim of this study was to design and validate a questionnaire to evaluate students’ perceptions of the engagement generated in university classrooms. In addition, this work also investigates its multidimensional conceptualisation. This has been complemented by a qualitative study on the perceptions of students from different university fields regarding aspects that influence greater engagement in the classroom.

Despite the value that many authors attribute to engagement in relation to achieving academic and professional success, its evaluation continues to pose a challenge (Mandernach, 2015), particularly in university education. Firstly, there is no consensus on the concept of engagement (Macfarlane & Tomlinson, 2017). A definition of engagement must encapsulate far more than the pleasure or dislike that the student feels for a class, subject, professor or teaching method. Engagement should not refer to professors wanting students to have fun in their classes, but rather wanting them to think, learn deeply and become competent professionals (Barkley, 2010). In accordance with this, an evaluation of engagement should facilitate clarification of its meanings, as well as the components and extension of the dimensions that comprise it. Secondly, evaluating engagement also has the function of recognising the effect of learning ecologies. Teaching activity and the students’ relationship with their immediate environment and the institution will lead to increased or decreased engagement. As previously indicated, engagement is malleable and professors must therefore be able to monitor and evaluate students’ learning experiences so as to recognise which of the strategies they employ are most effective (Wiggins et al., 2017).

Engagement can be evaluated in several different ways. Although questionnaires are the most commonly used method, observation, noting down moments during class, focus groups and even electrophysiological measures have also been used. All have their advantages and disadvantages. For example, observation is more reliable for recording observable behaviours, but does not record unobservable components such as cognitive and emotional elements. Although questionnaires may include all of the required dimensions, the subject may be influenced by social desirability. Applying mobile technology (Martin et al., 2020) or the Experience Sampling Method (Shernoff, 2013) to record perceptions regarding individual engagement during class is useful, but requires programmable devices. Focus groups provide students with the opportunity to discuss their experience in greater depth. However, they cover little ground and students are inclined to focus on the more observable elements of engagement, such as behavioural and contextual elements, and to neglect the more psychological ones (Appleton et al., 2008). Psychophysiological records assess the body’s responses throughout the different activities of the class, but cover few subjects. Given these considerations, the present study applies a mixed methodology and proposes a questionnaire validated by means of the nominal group technique (NGT). This method has the advantage of allowing a quick and less intrusive scrutiny of what the students think. Some examples can be found in Carpio et al. (2016) and Duers (2017).

In reference to the questionnaire, two main reasons prompted us to design our own based on current literature. First, investigating the multidimensionality of the engagement concept was one of the objectives of the study. Second, although the existing questionnaires already cover many aspects of the learning ecosystem, including the institution and the services it offers, the specific learning situations that interact with the dimensions of engagement have not been studied in depth. As the first step in a broader project where it was to be used as an instrument for evaluating the learning strategies professors employ in their classes (both traditional and innovative), this task focused exclusively on the class group. We therefore designed our own questionnaire to record the multiple aspects of students’ self-perception. As detailed below, the items include the three individual indicators (emotional, behavioural and cognitive) on the one hand, and those facilitators involved in the individual’s transaction with the learning context (social interactions and external resources) that influence engagement on the other. The former are dimensions that show the degree or level of student engagement with learning, while the latter are factors that influence the strength of engagement (Frederick et al., 2019).

MATERIALS AND METHODS

This section details the procedure employed in the study, including the quantitative and qualitative methods that comprise the mixed methodology used.

Mixed methodology

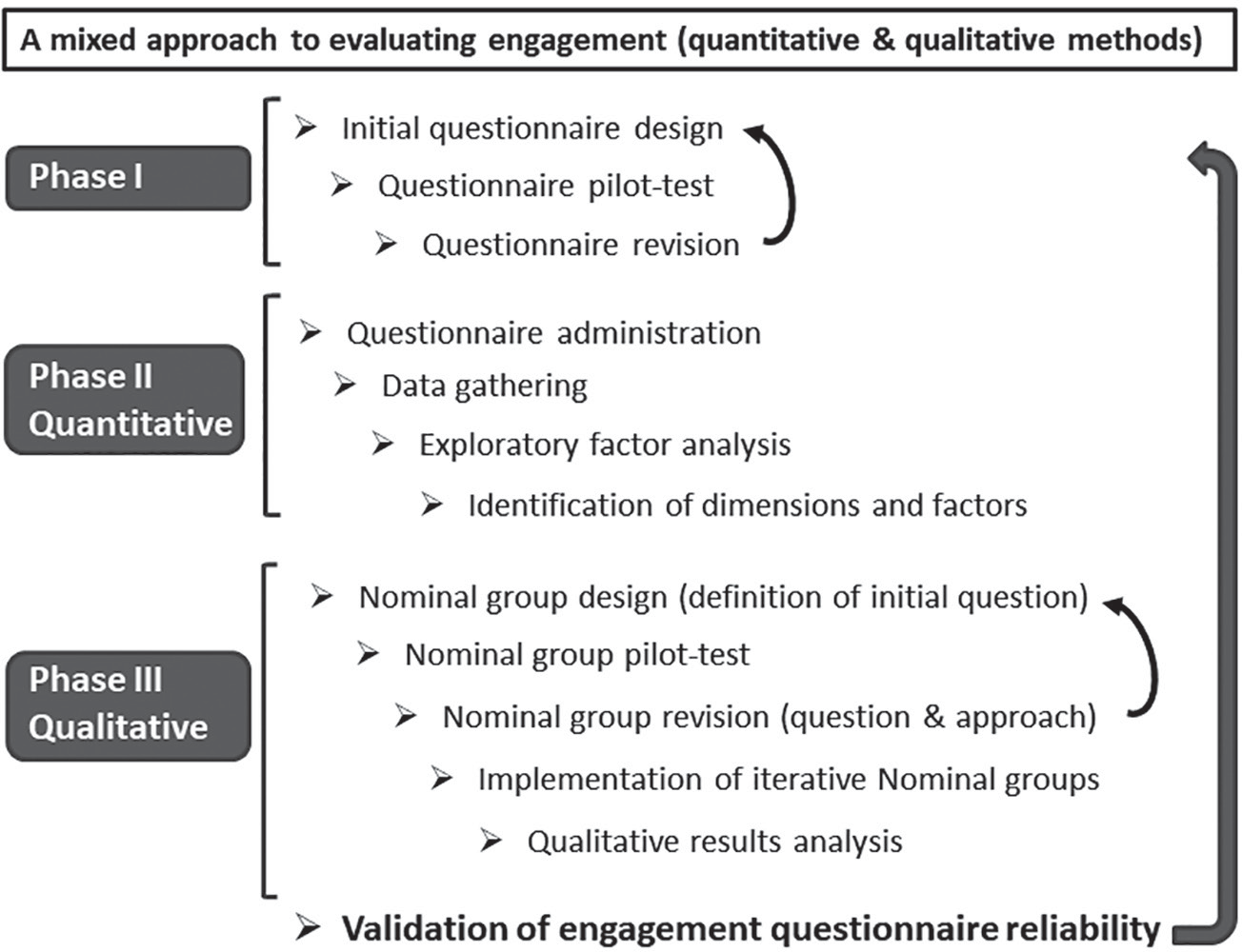

A mixed methodology (see Figure 1) was employed to reflect the complexity of engagement as a concept and to obtain more complete, comprehensive and in-depth information (Hammersley, 2007; Philips, 2009). A questionnaire was used as the quantitative instrument, and the nominal group technique as the qualitative one.

Figure 1

Overview of the development process for validating a questionnaire on university engagement. The process involves three phases: survey design, quantitative analysis and qualitative analysis.

The mixed method has been used following two types of sequences: qualitative-quantitative (for the elaboration of the items of the questionnaire; Wiggins et al., 2017), and quantitative-qualitative (to confirm the results; Henderson & Greene, 2014). In our study, we used the second approach to explore students’ perception of the elements involved in engagement and thus reinforce and delimit as precisely as possible the dimensions of the questionnaire.

The aim of using the quantitative method was to measure the multidimensional phenomenon with precision and objectivity, while the qualitative method was designed to learn more about students’ subjective perception of engagement. As already noted, the two methodologies were used sequentially. The first phase involved employing the quantitative method to validate the questionnaire and verify to what extent the dimensions and engagement factors proposed in the literature could be confirmed. In the second phase, nominal groups were used to investigate which elements students consider to be involved in engagement and the extent to which they correspond with the items included in the questionnaire.

Quantitative method

Questionnaire

The questionnaire design was based on an analysis of the literature on engagement in university contexts (Schaufeli et al., 2002; Ahlfeldt et al., 2005; Ouimet & Smallwood, 2005; Appleton et al., 2006; Krause & Coates, 2008; Seppälä et al., 2008). Initially, it consisted of a list of items related to the three dimensions indicative of engagement (cognitive, emotional and behavioural), plus a group of facilitators (the influence of the professor and classmates and the resources students are provided with for their learning). The final items (questions) were then drafted, taking into account that the scale must evaluate different methodologies used by professors.

The questions were designed following the usual criteria: that they were formulated correctly, that they obtained relevant data and did not influence students’ responses (Converse & Presser, 1986), that the answers provided were sincere (Tourangeau & Smith, 1996; Tourangeau & Yan, 2007) and that unanswered questions would be treated correctly (Seppälä et al., 2008; Groves & Couper, 1998).

To ensure the items’ understandability and readability for university students, the next step involved administering the questionnaire to a group of secondary school students. Once this had been confirmed, an initial pilot study was conducted on 82 randomly selected students attending different university courses.

In addition to the questionnaire items, the pilot study included a series of open-ended questions aimed at detecting any aspects that might be lacking clarity and need to be changed in future versions of the instrument. These were: 1. Do you understand the aim of the questionnaire?; 2. Do you feel comfortable answering the questions?; 3. Are there any questions you do not understand? Which? What aspect(s) cause confusion?; 4. Do any of the questions require too much thought before answering? Which?; 5. Are there any questions you would rather not answer? Which?; 6. Do you think there are any questions that should have been added? Which?; and 7. Do you think the questionnaire is too long?

The students examined the instrument in separate groups on each campus and provided feedback on each item’s clarity, understandability, relevance and appropriateness to the teaching context. In general terms, the students understood the aim of the questionnaire and felt comfortable answering the questions. The majority of respondents did not consider the questionnaire to be too long. The only question that generated problems was Question 20 (the word “persevere” in the first version was changed to “persist” in the final version). In addition, four further questions relating to digital technologies and professor relations were added based on the comments made by the surveyed students. The final version of the scale therefore consisted of 31 items (see Table 1), each of which invited four possible responses (1: strongly disagree, 2: disagree, 3: agree, and 4: strongly agree). This meant that it was possible to quantify the responses obtained.

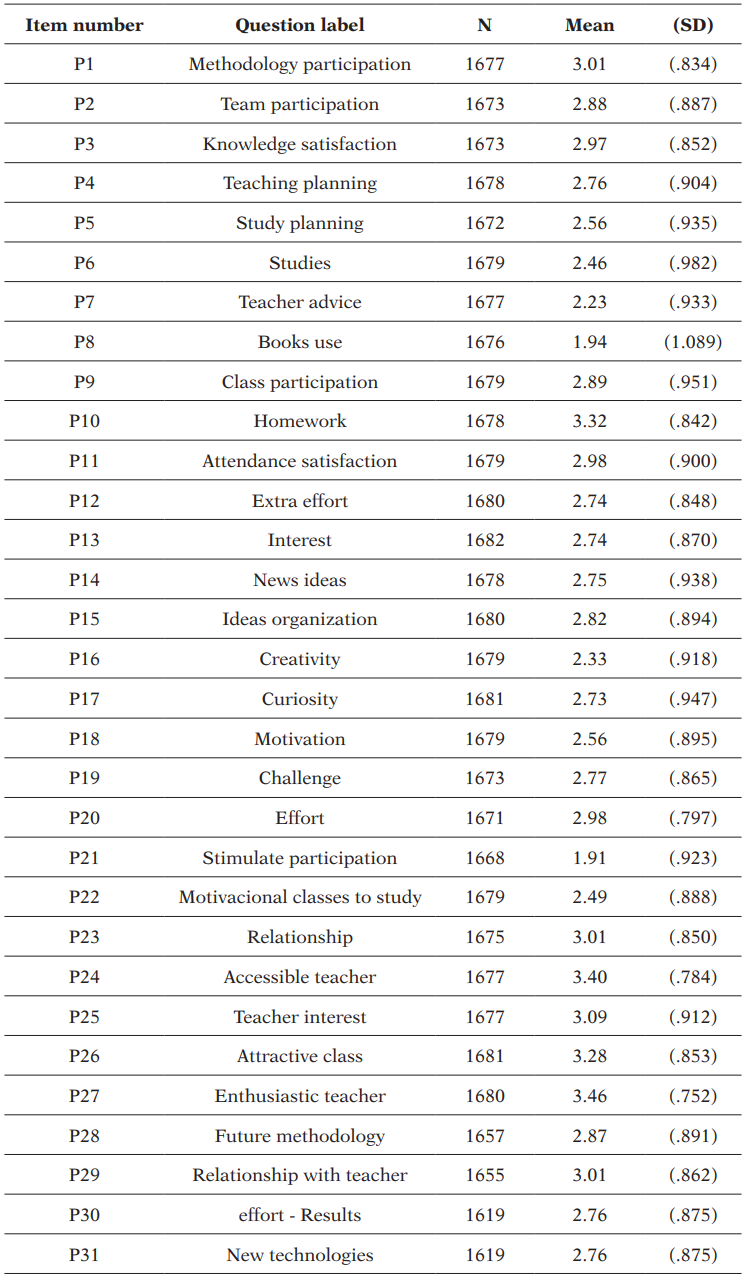

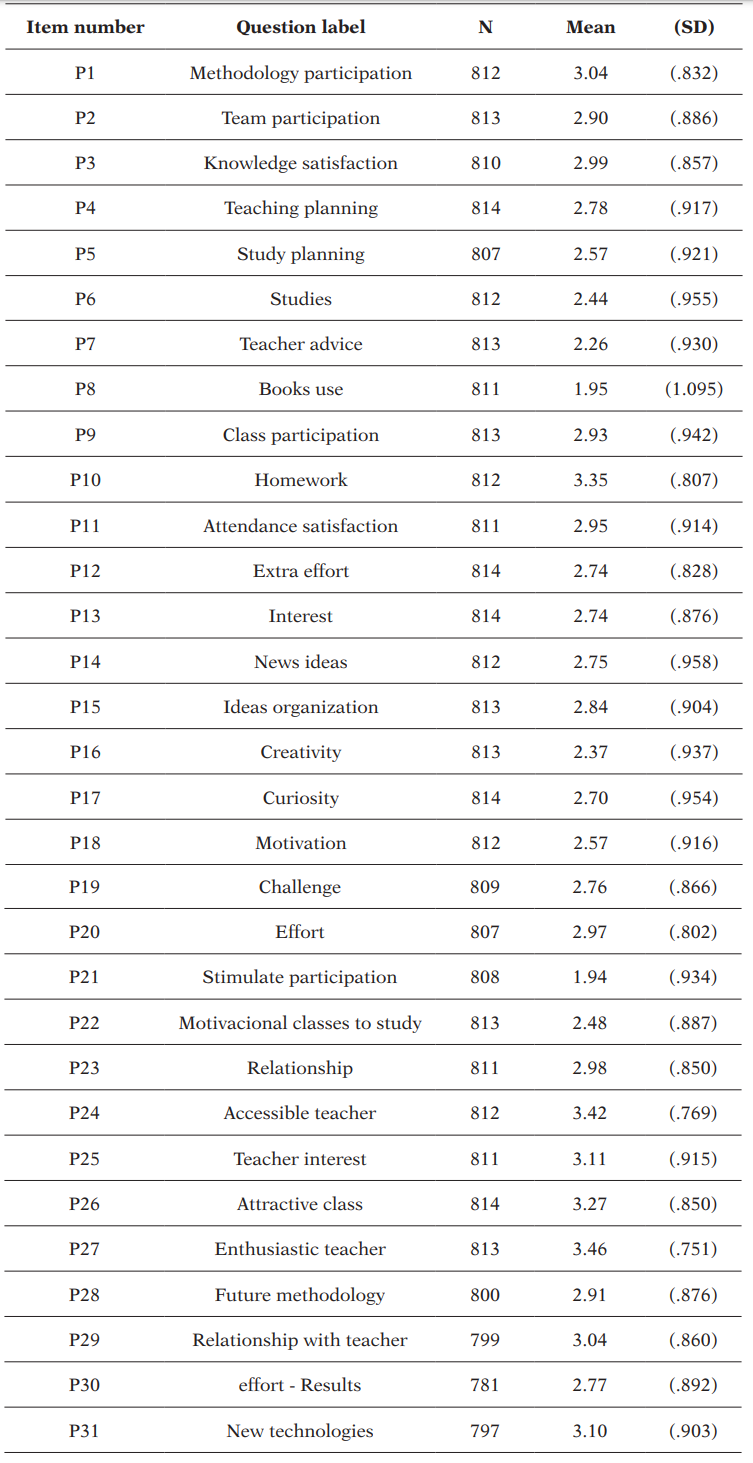

Descriptive statistics of the total cases

Data collection took place over a period of approximately three years and included a total of 1,783 returned questionnaires, 101 of which were invalidated due to being incomplete. The participating students were attending 20 courses being held in seven faculties at the University of Girona (UdG). A multivariate statistical analysis was conducted on the obtained data, adopting a structural equations approach (factor analysis).

Structural equations: Factor Analysis

The factor analysis determined the structural equations governing the relationships between the data obtained, identifying different groups of variables (items) that correlate strongly with one another but not with the other groups (Montoya, 2007). The groupings correspond to non-measurable concepts, which will henceforth be referred to as latent components or factors. The procedure was developed in two stages using the following software: SPSS (version 25), AMOS (version 22), and Mplus7 to evaluate the fit of the proposed model. The first stage was exploratory (Exploratory Factor Analysis, EFA), while the second was confirmatory (Confirmatory Factor Analysis, CFA).

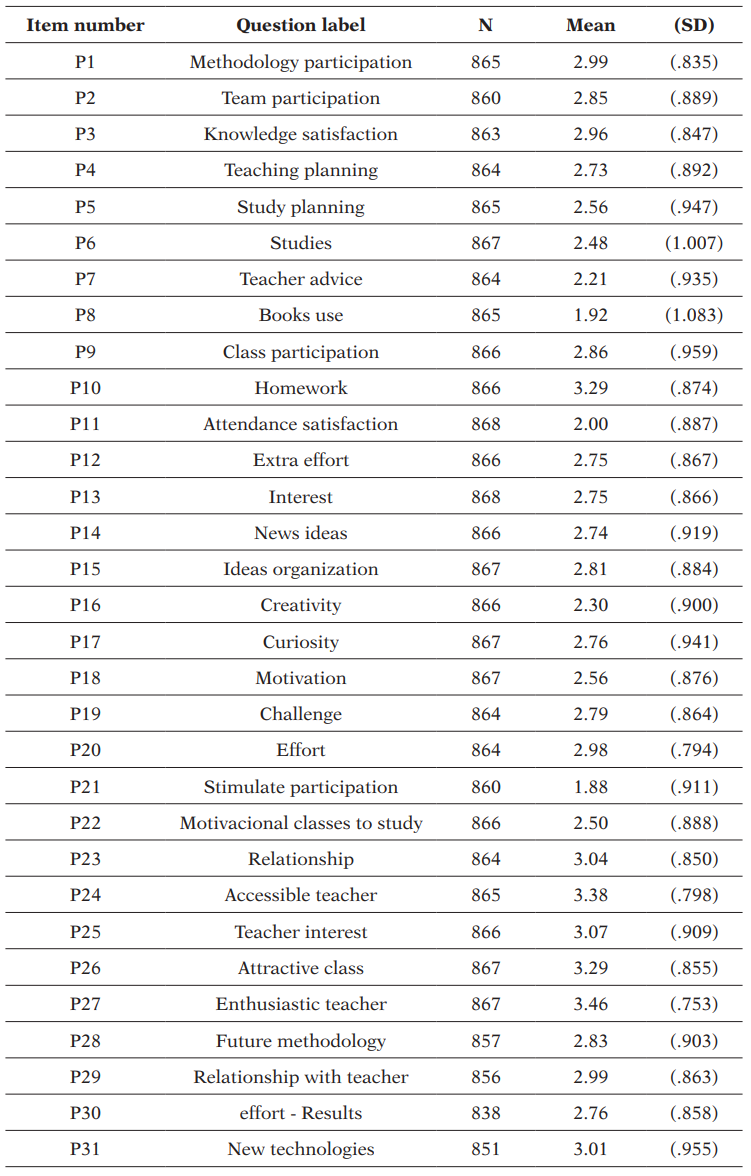

Descriptive statistics of the cases that composed the random sample used in the EFA

The EFA was performed on 920 cases (questionnaires with responses) randomly selected by the program (Table 2). The initial data matrix and correlations between the variables were compiled based on all of the values obtained for these cases. As a first step, the sample size and relationships between variables were evaluated in order to determine whether the data set was appropriate for factor analysis. Sampling adequacy was evaluated using the Kaiser-Meyer-Olkin (KMO) test (with a result of .945), and the strength of the relationships between variables was assessed through Bartlett’s test of sphericity (approx. chi-square = 10628.312; df = 378; p < .001). The next step consisted of factor extraction through principal component analysis. Factor rotation then defined the number of factors that best represented the relationships between the variables as a whole. The rotation was performed using the Varimax method with Kaiser normalization.
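As a rough illustration of the two adequacy checks described above, the following Python sketch computes Bartlett's test statistic and the overall KMO index from a correlation matrix. It is not the procedure run in the study, which relied on SPSS; the function names (`invert`, `determinant`, `bartlett_sphericity`, `kmo`) are hypothetical, and the p-value lookup against the chi-square distribution is omitted.

```python
import math


def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def determinant(matrix):
    """Determinant by Gaussian elimination with partial pivoting."""
    n = len(matrix)
    m = [list(row) for row in matrix]
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            return 0.0
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            det = -det  # a row swap flips the sign
        det *= m[col][col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            m[r] = [a - f * b for a, b in zip(m[r], m[col])]
    return det


def bartlett_sphericity(corr, n_cases):
    """Return (chi-square, degrees of freedom) for Bartlett's test."""
    p = len(corr)
    chi2 = -(n_cases - 1 - (2 * p + 5) / 6) * math.log(determinant(corr))
    df = p * (p - 1) // 2
    return chi2, df


def kmo(corr):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    p = len(corr)
    inv = invert(corr)
    r2 = q2 = 0.0
    for i in range(p):
        for j in range(p):
            if i != j:
                # Partial correlation from the inverse correlation matrix.
                partial = -inv[i][j] / math.sqrt(inv[i][i] * inv[j][j])
                r2 += corr[i][j] ** 2
                q2 += partial ** 2
    return r2 / (r2 + q2)
```

For 28 variables the degrees of freedom p(p - 1)/2 equal 378, which agrees with the df value reported for Bartlett's test.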

The model was evaluated (CFA) by randomly selecting a total of 863 cases. A check was performed to verify whether the model could be estimated (Batista-Foguet, Coenders & Alonso, 2004) by identifying five latent factors with three or more associated variables (items). Those variables that did not fit the model were eliminated. Goodness of fit was verified using the χ² statistic, the root mean square error of approximation (RMSEA), and the Tucker-Lewis (TLI) and comparative fit (CFI) indices (Pérez-Gil et al., 2000). Model reliability was evaluated using standardised factor loadings and error variances. Following this, we proceeded to determine the correlations between the latent factors and the explained variance (R²) for the values of the variables.
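The fit indices named above can be sketched from their textbook definitions. The Python function below is a minimal illustration, not the study's computation (which used AMOS and Mplus). The function name `fit_indices` and all input values are hypothetical, and the RMSEA uses N - 1 in the denominator, whereas some programs use N.

```python
import math


def fit_indices(chi2, df, chi2_null, df_null, n_cases):
    """Textbook chi-square-based fit indices for a fitted model.

    chi2, df: test statistic and degrees of freedom of the fitted model.
    chi2_null, df_null: the same for the baseline (independence) model.
    n_cases: sample size.
    """
    d_model = max(chi2 - df, 0.0)              # model non-centrality estimate
    d_null = max(chi2_null - df_null, d_model)  # baseline non-centrality estimate
    return {
        "chi2_df_ratio": chi2 / df,
        # Convention assumed here: N - 1 in the denominator.
        "rmsea": math.sqrt(d_model / (df * (n_cases - 1))),
        "cfi": 1.0 - d_model / d_null if d_null > 0 else 1.0,
        "tli": ((chi2_null / df_null) - (chi2 / df))
               / ((chi2_null / df_null) - 1.0),
    }


# Hypothetical inputs, chosen only to make the arithmetic easy to follow.
example = fit_indices(chi2=200.0, df=100, chi2_null=2100.0, df_null=120, n_cases=401)
```

The baseline model's χ² is needed for CFI and TLI and is not reported in the text, which is why the example uses invented inputs rather than the study's figures.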

Qualitative method

Nominal groups

The second part of the study consisted of conducting nominal groups. The aim here was to use this more open and non-directive method to verify whether the engagement factors included in the questionnaire coincided with those identified by students.

The nominal group method was devised by Delbecq and Van de Ven in 1971 and allows all members of a group to participate in a discussion and reach a consensus.

The procedure involves five stages: start, idea generation, exchange, evaluation and closure. In the first stage, the facilitator explains the aims and the topic to focus on. When generating ideas, each participant must write them down individually.

Subsequently, the moderator asks participants to share one idea each until all ideas have been presented. At the end of this stage, the group is asked whether the ideas are clear; if there are any doubts, the appropriate clarifications are given.

In the evaluation phase, the ideas are sorted alphabetically so that each participant can rank them in order of importance. The overall scores for each idea are then obtained. The results are discussed in the final phase (optional).
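The evaluation stage described above can be sketched as a simple rank aggregation. The text does not specify how overall scores were computed, so the scheme below is an assumption: each participant's first choice receives `top_k` points, the second `top_k - 1`, and so on. The function name `aggregate_rankings` and the idea labels are hypothetical.

```python
from collections import Counter


def aggregate_rankings(rankings, top_k=5):
    """Sum rank-based points per idea across participants.

    rankings: one list per participant, ideas ordered from most to least
    important. Only the first top_k entries of each list score points.
    Returns (idea, score) pairs sorted by descending score, then by label.
    """
    scores = Counter()
    for ranking in rankings:
        for position, idea in enumerate(ranking[:top_k]):
            scores[idea] += top_k - position  # assumed scoring rule
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Three hypothetical participants ranking three ideas labelled A, B and C.
result = aggregate_rankings([["A", "B", "C"], ["B", "A", "C"], ["B", "C", "A"]], top_k=3)
# result == [("B", 8), ("A", 6), ("C", 4)]
```

Other scoring rules, such as counting only first choices or averaging ranks, would fit the same structure.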

In the present study, the members of the research team defined the aim and target question in their own nominal group. Subsequently, a pilot test was organised with a group of students to assess the suitability of the question.

Finally, six nominal groups were set up. They each comprised students with a minimum of one completed university course at the following University of Girona schools and faculties: The Polytechnic School, the Faculty of Economic and Business Sciences, the Faculty of Law, the Faculty of Sciences, the Faculty of Medicine and the Faculty of Education and Psychology. The groups shared a joint facilitator and two assistants.

Mixed analysis: data triangulation

The analysis consisted of a triangulation of data, associating the most valued ideas on student engagement with the different factors included in the questionnaire.

RESULTS

Quantitative results

The internal consistency of the 31-item questionnaire on student engagement was determined by calculating Cronbach’s alpha coefficient for the complete instrument. The coefficient was .924, which was considered satisfactory for the use of the instrument.
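For readers unfamiliar with the coefficient, Cronbach's alpha can be sketched from its standard definition, alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores). The Python function below is a minimal illustration on invented data, not the study's SPSS computation; the name `cronbach_alpha` and the toy responses are hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])  # number of items
    item_variances = [variance([r[i] for r in responses]) for i in range(k)]
    total_variance = variance([sum(r) for r in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Invented 4-point Likert answers from four respondents to three items.
toy_responses = [
    [1, 2, 2],
    [2, 3, 3],
    [3, 3, 4],
    [4, 4, 4],
]
alpha = cronbach_alpha(toy_responses)
```

The sketch assumes complete responses; rows with missing answers would need to be excluded or imputed beforehand.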

Exploratory factor analysis

Table 1 shows the descriptive statistics for the sample of cases selected for EFA, and Table 2 shows the descriptive statistics for the initial EFA data matrix.

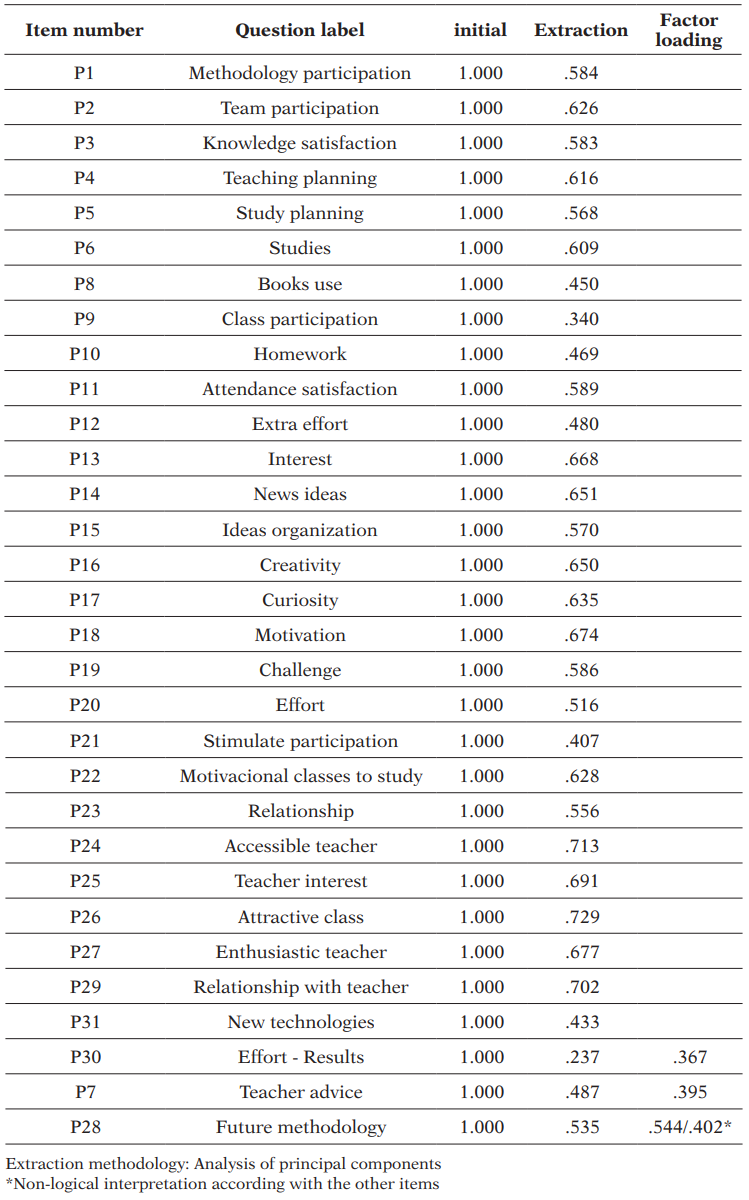

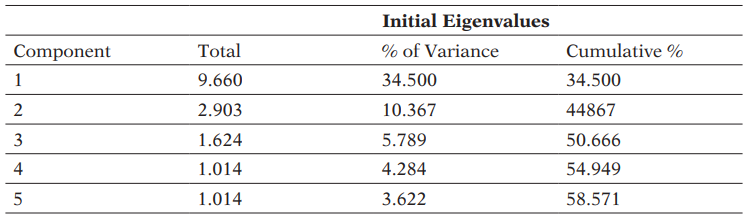

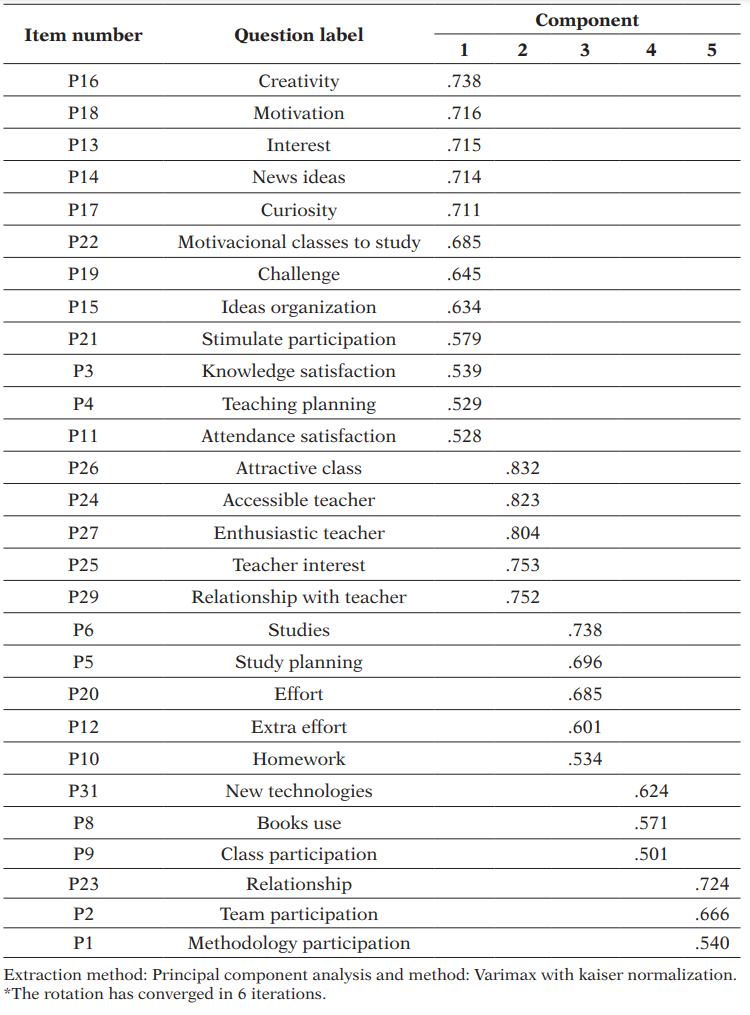

The KMO index had a value of .945, which indicated good suitability of the sample for factor analysis, while Bartlett’s sphericity test (χ² = 10628.312) was significant (p < .001), leading to rejection of the null hypothesis that the variables are uncorrelated. The communality values obtained for the variables corresponding to items P30, P7 and P28 (Table 3) indicated a low reproducibility of their original variability, meaning they could be excluded from the analysis.

EFA: Values of the communalities

The initial eigenvalues (Table 4) indicated the extraction of five factors that would explain 58.57% of the variance. Finally, the rotated component matrix (Table 5) showed that the variables P16, P18, P13, P14, P17, P22, P19, P15, P21, P3, P4, and P11 displayed correlations (with values ranging from .738 to .528) with Factor 1 (motivation, which also included questions on emotion and cognition); variables P26, P24, P27, P25, and P29 (from .832 to .752) with Factor 2 (professor); variables P6, P5, P20, P12, and P10 (from .738 to .534) with Factor 3 (behaviour); variables P31, P8, and P9 (from .624 to .501) with Factor 4 (resources); and variables P23, P2 and P1 (from .724 to .540) with Factor 5 (relationship).
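The link between eigenvalues, the number of extracted factors and the percentage of explained variance can be illustrated with a short Python sketch. It assumes the common eigenvalue-greater-than-one retention rule, which the text does not spell out, and it uses invented eigenvalues rather than those in Table 4. The function name `retained_factors` is hypothetical.

```python
def retained_factors(eigenvalues, threshold=1.0):
    """Count components above the threshold and their share of total variance.

    For a principal component analysis of a correlation matrix, the
    eigenvalues sum to the number of variables, so each eigenvalue divided
    by that sum is the proportion of variance the component explains.
    """
    total = sum(eigenvalues)
    kept = [ev for ev in eigenvalues if ev > threshold]
    explained_pct = 100.0 * sum(kept) / total
    return len(kept), explained_pct


# Invented eigenvalues for six variables (they sum to 6.0).
n_factors, pct = retained_factors([3.0, 1.5, 0.9, 0.4, 0.15, 0.05])
# n_factors == 2 and pct is 75.0 (4.5 out of 6.0)
```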

EFA: Total variance explained

EFA: Rotated components matrix

Confirmatory Factor Analysis

Table 6 shows the descriptive statistics for the sample of cases selected for CFA.

Descriptive statistics of the cases that composed the random sample used in the CFA

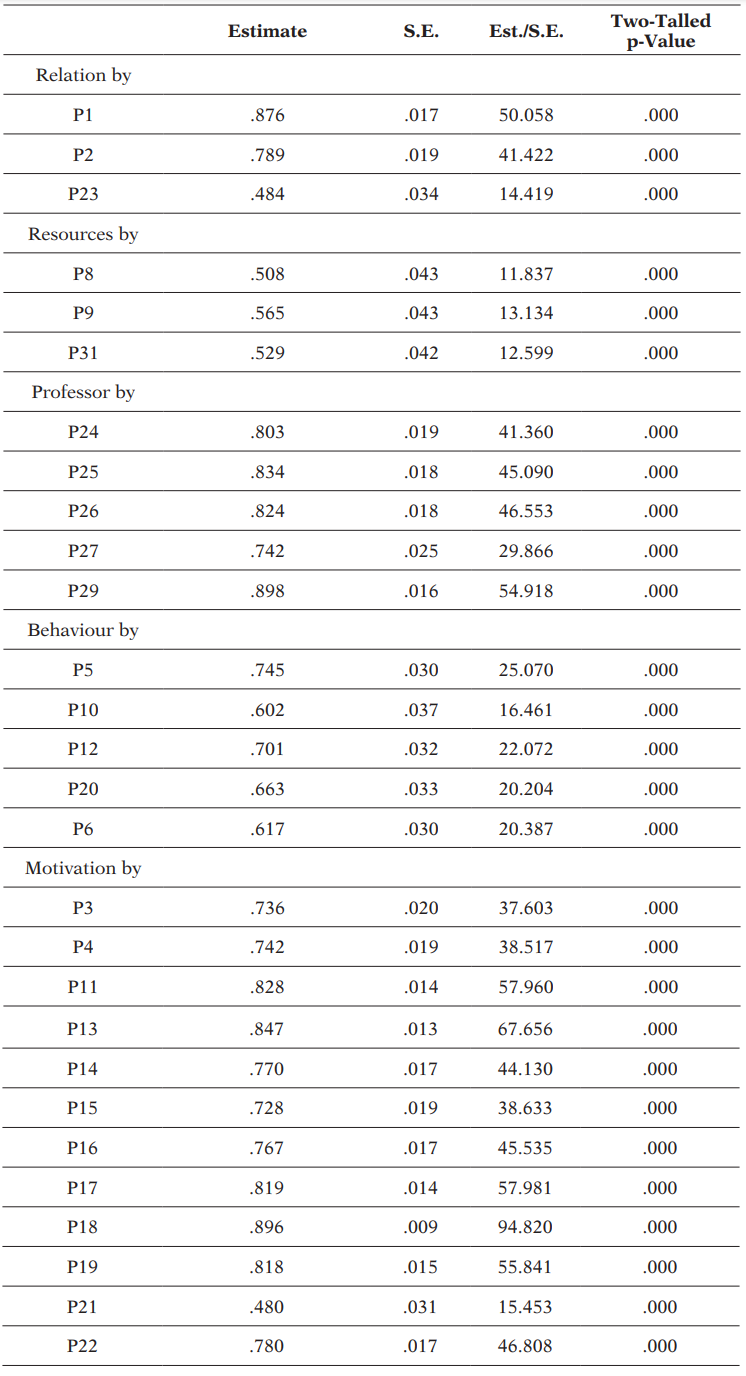

Identifying the latent factors in the model (EFA components) suggested the elimination of three variables (P30, P7 and P28). The association obtained for the other variables of the model and the latent factors is shown in Table 7. Regarding the model’s goodness of fit, the ratio between χ² and the number of degrees of freedom was 4.27 (p < .001). It should be noted that the high number of cases in the sample may have favoured this value (LaNasa et al., 2009). We therefore calculated the goodness of fit indices independently of the sample size using CFI and TLI. The following values were obtained: CFI = .958, TLI = .953, both indicating an excellent model fit. The RMSEA value remained slightly above .05 (.063), which was also indicative of an acceptable fit (90% CI: .060, .067).

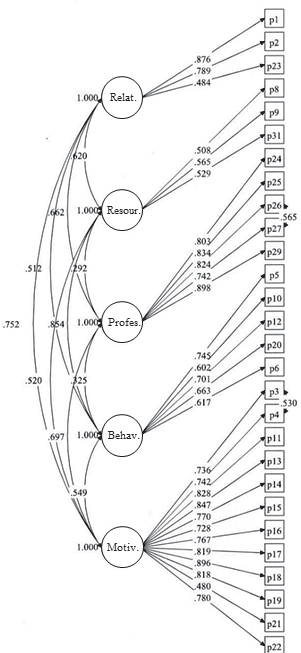

Table 7 shows the correlations between the variables and the latent factors. As in the EFA, five latent factors can be distinguished: two dimensions (emotional-cognitive and behavioural) and three facilitators (relationships, resources and professor).

Relationships of the variables with the latent factors

The relationships of the variables with respect to their corresponding latent factors (Wang et al., 2017) displayed critical ratio values of between 11.837 (for the variable P8 in relation to Resources) and 94.820 (for the variable P18 in relation to Motivation), with significant values in all of the relationships considered. Correlations were found between variables P27 and P26, and between P3 and P4. Latent factors showed strong correlations between Resources and Behaviour (.854), and between Resources and Relationship (.620); between Relationship and Motivation (.752), and between Relationship and Professor (.662); between Professor and Motivation (.697); and between Behaviour and Motivation (.549). The explained variance values (R²) were significant in relation to the variables considered for the model.

Figure 2

The relationships between the variables and the latent factors

Qualitative results

The following aims were established for the research team’s nominal group: to determine students’ ideas and conceptions about their engagement in subjects and identify which of these generate most consensus. In addition, the following question was asked: What are the reasons for you engaging in some subjects and not in others?

The pilot test with students revealed two difficulties associated with the question. Firstly, it was formulated in both positive and negative terms. Secondly, students confused the concept of “engagement” with obligation (in the original language of the study). Consequently, it was rephrased: What makes you more interested in some subjects? Two further problematic aspects were identified in the procedure: the need to limit the number of participants (approximately 8 students) and the time allowed for generating individual ideas (5 minutes).

Results of the mixed analysis

Triangulating the data allowed us to identify the extent to which the ideas with the greatest consensus generated by the students in the nominal group corresponded with the factors obtained from the quantitative analysis of the questionnaire.

The five ideas that generated the greatest consensus in each group were selected based on the contributions of the nominal groups. These were subsequently related to the five factors obtained through the confirmatory analysis. This association revealed three facilitators: professors and social relations were strongly represented, and resources to a lesser extent. Finally, one of the dimensions, motivation, which includes emotion and cognition, also appeared. The only dimension not represented was that of behaviour, since it did not correspond to the nominal group’s question.

Regarding the facilitator professor, which was the most mentioned, contributions emerged relating to the following aspects:

- the relationship with students: “Professors should foster at least some proximity with their students”, “Professor-student interaction: close, interactive and concerned that we understand”.

- the attitude towards interaction and transmitting trust: “The attitude of the professor, prepared to be receptive to the group, close”, “The attitude of the professor, who transmits trust, a positive attitude, enthusiasm”.

- an interest in education: “That the teaching staff are interested in education, that they have an interest in transferring their knowledge”, “That PBL teachers have an interest in the methodology and are competent at managing groups, engaging, organising, and know how PBL works”, “Professors who are motivated to teach their subject (who believe in it)”, “Motivated professors (you can see they like to teach, like what they are teaching and don’t look like they’re doing it out of obligation)”.

- experience: “The professor’s experience”.

- planning: “Good planning of content”.

The facilitator related to the relationship is manifested through the following ideas expressed by the students: “The atmosphere in the classroom; when you feel more comfortable with the professor and the students get more involved”, “That there is a feeling of camaraderie in the group and we enjoy it”.

In relation to resources, the questionnaire asked students which of their own resources they use (books, ICTs). Resources offered to them by the environment appeared in the responses given by the nominal groups: “The organisation of the module, coordination and well-defined objectives”, “That there is a correlation between the subject content and the time spent on it”. Finally, a key element regarding resources is participation: “I prefer the class to be practical and dynamic”, “The dynamics of the subject, the approach adopted by the professor, that there is participation and not only lecturing”, “That you feel confident to ask questions and participate in the class”.

The dimensions related to motivation, emotion and cognition were reflected in the following ideas, among others: “That the content can be applied in practice”, “Pragmatism and functionality in the subjects, learning things that we will later use in professional life”, “I like to feel that I’m learning”, “Promoting lateral thinking, understood as the tool that allows theoretical knowledge to be transferred to practice, to reality. As well as teaching knowledge, teaching competences”.
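The triangulation described in this section amounts to coding each high-consensus idea to one of the five factors and then checking which factors are covered. The following minimal Python sketch illustrates that bookkeeping under stated assumptions: the ideas are a small illustrative subset of the quotations above, coded as in the text, and neither the selection nor the counts reproduce the study's actual consensus ranking. The function name `triangulate` is our own.

```python
from collections import Counter

# The five latent factors named in the confirmatory analysis.
FACTORS = ["professor", "relationships", "resources", "motivation", "behaviour"]

# Illustrative subset of student ideas quoted above, each hand-coded to a
# factor as in the text. For demonstration only: this is NOT the study's
# actual list of top-consensus ideas.
coded_ideas = [
    ("The professor's experience", "professor"),
    ("Good planning of content", "professor"),
    ("Motivated professors", "professor"),
    ("The atmosphere in the classroom", "relationships"),
    ("A feeling of camaraderie in the group", "relationships"),
    ("I prefer the class to be practical and dynamic", "resources"),
    ("I like to feel that I'm learning", "motivation"),
]


def triangulate(ideas, factors):
    """Count coded ideas per factor and list factors with no matching idea."""
    unknown = {factor for _, factor in ideas if factor not in factors}
    if unknown:
        raise ValueError(f"ideas coded to unknown factors: {sorted(unknown)}")
    counts = Counter(factor for _, factor in ideas)
    coverage = {factor: counts.get(factor, 0) for factor in factors}
    missing = [factor for factor in factors if coverage[factor] == 0]
    return coverage, missing


coverage, missing = triangulate(coded_ideas, FACTORS)
print(coverage)
print("Not represented:", missing)
```

With this illustrative coding, behaviour is the only factor left without a matching idea, which mirrors the pattern reported in the text.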

COMMENTS AND DISCUSSION

The aims of the work presented here were to meet the need for valid and reliable measurements of university students’ academic engagement and to analyse its multidimensional and transactional nature. According to Skinner and Pitzer (2012), engagement can be studied on three levels: in relation to the institution, the class group and the activities undertaken. These authors stated that the level of analysis chosen to study the concept must be consistent, since it affects how the concept is operationalised and the results of its assessment. In this research, we have focused on the latter two levels, our aim being to create an instrument that can be used to assess activities and teaching methodologies employed by professors in the classroom and redirect them towards greater student engagement. In order to obtain more in-depth knowledge of this concept, data deriving from a quantitative analysis of the results of a questionnaire were triangulated with the qualitative analysis of responses obtained from nominal groups of students attending different faculties at the University of Girona.

Statistical tests yielded high reliability and validity scores for the questionnaire employed in the study and it is therefore presented as an instrument that may be useful for professors in assessing and refining their teaching activities. Our exploratory analysis of the responses given by students suggested the existence of two dimensions (emotional-cognitive and behavioural) and three facilitators (professor, resources and peer relationships). In addition, according to this analysis, the model fits better if items P7, P28 and P30 are discarded (see Table 3). Once these items were excluded from the original questionnaire, the confirmatory analysis demonstrated its validity.

One aspect worth noting is that although our initial theoretical model considered three dimensions - cognitive, emotional and behavioural (Shernoff, 2013; Appleton et al., 2008; Fredricks et al., 2004) - the CFA did not discriminate between the emotional and cognitive dimensions, considering them as one (see Table 5, Table 7 and Figure 2). These findings are in accordance with authors such as Reschly et al. (2008), who propose psychological and behavioural components. Ben-Eliyahu et al. (2018) also reported two dimensions, in this case the cognitive and the emotional, with the behavioural dimension merged into the former. One explanation for our results could be that it is difficult for students to distinguish cognition from emotion because the two are interdependent (Storbeck & Clore, 2007) and interact dynamically in a non-linear relationship (Phelps, 2006). Other possible explanations could be related to the items of the questionnaire. Many items regarding the cognitive-emotional dimension refer to motivational aspects or to the satisfaction felt by students. However, only two are specifically cognitive (number 14, which refers to the ability to analyse, and number 15, which refers to summarising and organising ideas). Thus, there were not enough items to allow the cognitive dimension to be considered separately from the emotional one.

Regarding the facilitators, many studies in the literature have focused on identifying the classroom factors associated with student engagement as their research objective. However, most of these have centred around only one facilitator (the professor, the classmates or the atmosphere). Our research has analysed them together in three different groups. In this sense, the results of this study confirm the presence of three latent variables that facilitate engagement: the professor, social relationships and resources used in class (which may be objective, such as books and new technologies, or perceptive, such as the possibility of participating in class, a consideration clearly expressed in the nominal groups). In addition, the transactional character of the dimensions is also observed through the cognitive, emotional or motivational effect generated by the activities carried out in class.

Our study has a few limitations. First, the questionnaire does not analyse in depth the influence of new technologies on student engagement. The rapid increase in the introduction of new technologies in university teaching is widely recognised, especially since the onset of the COVID-19 pandemic. Therefore, more research is needed on online teaching (e.g. studies show that engagement decreases in online teaching compared to face-to-face teaching; Farrell & Brunton, 2020) and also on the use of social networks and digital ecosystems to provide opportunities to increase engagement (Ansari & Khan, 2020). Future research should investigate this field which, due to its complexity, deserves a research framework in itself (Bond et al., 2020).

Our research is a first step in a larger project that aims to assess engagement with different methodologies used in university classrooms. It is clear that the study of engagement is not limited to this framework. This field opens up many possibilities to explore, such as engagement when using methodologies that transcend classrooms, for example internships in vocational centres and companies (Nepal & Rogerson, 2020). In this field, one very interesting topic is civic engagement (Theiss-Morse & Hibbing, 2005), in other words the involvement of students in politics, non-profit associations, the promotion of democratic values, the fight against climate change or the 2030 agenda for sustainable development, for example (Brugmann et al., 2019).

These limitations of our study open up new fields for future research. In this sense, one possibility would be to investigate whether the questionnaire and the proposed qualitative method allow for identification and analysis of different types of engagement in relation to the characteristics of students (Mu & Cole, 2019), of institutions (Pike & Kuh, 2005) or, as noted above, of different teaching methodologies applied in the classroom (e.g. flipped classroom, problem-based learning).

CONCLUSIONS

The methods employed to assess engagement in this research provide a statistically valid and useful instrument aimed at determining students’ perceptions of the engagement generated in university classrooms. In addition, the questionnaire identifies the internal and individual factors of engagement (dimensions) and those that are transactional with the context (facilitators). The nominal groups were more suited to identifying the facilitators, as these are more observable - and consequently more recognisable - by students.

The mixed methodology revealed improvements that should be incorporated in the questionnaire. The confirmatory analysis showed the need to incorporate more items related to the cognitive dimension (attention, concentration, individual planning, memory), while the qualitative methodology revealed the need to incorporate items related to resources in the environment (planning and coordination between professors, coherent and explicit objectives). The need for these environmental resources is in accordance with the findings of Deslauriers et al. (2018), who show that students like classes that facilitate cognitive fluency even if they learn less than in classes with superior pedagogical methodologies. In this sense, taking environmental resources into account is an important element in addressing student resistance and preparing students for methodologies that are more effective but that, because of the effort they require, can have detrimental effects on student engagement. In fact, besides being a research instrument, the nominal group methodology proved to be a useful, quick and effective means of obtaining student perspectives on the facilitators of class engagement and of preparing and coaching students for methodologies that require greater participation.

REFERENCES

Ahlfeldt, S., Mehta, S., & Sellnow, T. (2005). Measurement and analysis of student engagement in university classes where varying levels of PBL methods of instruction are in use. Higher Education Research & Development, 24(1), 5-20. https://doi.org/10.1080/0729436052000318541

Altbach, P.G., Reisberg, L., & Rumbley, L.E. (2009). Trends in Global Higher Education: Tracking an Academic Revolution. UNESCO 2009 World Conference on Higher Education, Paris, 5-8 July 2009.

Ansari, J.A.N., & Khan, N.A. (2020). Exploring the role of social media in collaborative learning the new domain of learning. Smart Learning Environments, 7(9). https://doi.org/10.1186/s40561-020-00118-7

Appleton, J.J., Christenson, S.L., & Furlong, M.J. (2008). Student engagement with school: Critical conceptual and methodological issues of the construct. Psychology in the Schools, 45(5), 369-386. https://doi.org/10.1002/pits.20303

Appleton, J.J., Christenson, S.L., Kim, D., & Reschly, A.L. (2006). Measuring cognitive and psychological engagement: Validation of the Student Engagement Instrument. Journal of School Psychology, 44(5), 419-431. https://doi.org/10.1016/j.jsp.2006.04.002

Archambault, I., Janosz, M., Fallu, J.S., & Pagani, L.S. (2009). Student engagement and its relationship with early high school dropout. Journal of Adolescence, 32(3), 651-670. https://doi.org/10.1016/j.adolescence.2008.06.007

Barkley, E.F. (2010). Student engagement techniques: A handbook for college faculty. Jossey-Bass.

Batista-Foguet, J.M., Coenders, G., & Alonso, J. (2004). Análisis factorial confirmatorio. Su utilidad en la validación de cuestionarios relacionados con la salud. Medicina Clínica, 122(S1), 21-7.

Ben-Eliyahu, A., Moore, D., Dorph, R., & Schunn, C.D. (2018). Investigating the multidimensionality of engagement: Affective, behavioral, and cognitive engagement across science activities and contexts. Contemporary Educational Psychology, 53, 87-105. https://doi.org/10.1016/j.cedpsych.2018.01.002

Bond, M., Buntins, K., Bedenlier, S., Zawacki-Richter, O., & Kerres, M. (2020). Mapping research in student engagement and educational technology in higher education: a systematic evidence map. International Journal of Educational Technology in Higher Education, 17(2). https://doi.org/10.1186/s41239-019-0176-8

Brugmann, R., Côté, N., Postma, N., Shaw, E.A., Pal, D., & Robinson, J.B. (2019). Expanding student engagement in sustainability: Using SDG- and CEL-focused inventories to transform curriculum at the University of Toronto. Sustainability, 11(2), 530. https://doi.org/10.3390/su11020530

Capella, E., Kim, H.Y., Neal, J.W., & Jackson, D.R. (2013). Classroom peer relationships and behavioral engagement in elementary school: The role of social network equity. American Journal of Community Psychology, 52(3-4), 367-379. https://doi.org/10.1007/s10464-013-9603-5

Carpio, L., Momplet, V., Plasencia, M., San Gabino, Y., Canto, M., & Pérez, O. (2016). Estrategia metodológica para incrementar las investigaciones sobre Medicina Natural y Tradicional en Villa Clara. Revista Educación Médica del Centro, 8(S1), 31-45.

Collie, R.J., Holliman, A.J., & Martin, A.J. (2017). Adaptability, engagement and academic achievement at university. Educational Psychology, 37(5), 632-647. https://doi.org/10.1080/01443410.2016.1231296

Converse, J.M., & Presser, S. (1986). Survey Questions: Handcrafting the Standardized Questionnaire. Sage Publications.

Christenson, S.L., Reschly, A.L., & Wylie, C. (Eds.). (2012). Handbook of Research on Student Engagement. Springer. https://psycnet.apa.org/doi/10.1007/978-1-4614-2018-7

Delbecq, A.L., & Van de Ven, A.H. (1971). A group process model for problem identification and program planning. The Journal of Applied Behavioral Science, 7(4), 466-492. https://doi.org/10.1177%2F002188637100700404

Deslauriers, L., McCarty, L.S., Miller, K., Callaghan, K., & Kestin, G. (2018). Measuring actual learning versus feeling of learning in response to being actively engaged in the classroom. Proceedings of the National Academy of Sciences, 116(39), 19251-19257. https://doi.org/10.1073/pnas.1821936116

Duers, L.E. (2017). The learner as co-creator: A new peer review and self-assessment feedback form created by student nurses. Nurse Education Today, 58, 47–52. https://doi.org/10.1016/j.nedt.2017.08.002

Farrell, O., & Brunton, J. (2020). A balancing act: a window into on line student engagement experiences. International Journal of Educational Technology in Higher Education, 17, 1-19. https://doi.org/10.1186/s41239-020-00199-x

Fernández-Zabala, A., Goñi, E., Camino, I., & Zulaika, L.M. (2016). Family and school context in school engagement. European Journal of Psychology of Education, 9(2), 47-55. https://psycnet.apa.org/doi/10.1016/j.ejeps.2015.09.001

Finn, J.D. (1989). Withdrawing from School. Review of Educational Research, 59(2), 117-142. https://doi.org/10.3102/00346543059002117

Fredricks, J.A., Blumenfeld, P.C., & Paris, A.H. (2004). School Engagement: Potential of the Concept, State of the Evidence. Review of Educational Research, 74(1), 59-109. https://doi.org/10.3102/00346543074001059

Fredricks, J.A., Reschly, A., & Christenson, S.L. (2019). Handbook of Student Engagement Interventions: Working with Disengaged Students. Elsevier.

Groves, R.M., & Couper, M.P. (1998). Nonresponse in Household Interview Surveys. John Wiley & Sons.

Hammersley, M. (2007). Media bias in reporting social research?: the case of reviewing ethnic inequalities in education. Routledge.

Henderson, D.X., & Greene, J. (2014). Using mixed methods to explore resilience, social connectedness, and re-suspension among youth in community-based alternative-to-suspension program. International Journal of Child, Youth and Family Studies, 5(3), 433-446. https://doi.org/10.18357/ijcyfs.hendersondx.532014

Kahu, E.R. (2013). Framing Student Engagement in Higher Education. Studies in Higher Education, 38(5), 758-773. https://doi.org/10.1080/03075079.2011.598505

Krause, K.R., & Coates, H. (2008). Students’ engagement in first‐year university. Assessment & Evaluation in Higher Education, 33(5), 493-505. https://doi.org/10.1080/02602930701698892

Lam, S.F., Jimerson, S., Shin, H., Cefai, C., Veiga, F.H., Hatzichristou, C., Polychroni, F., Kikas, E., Wong, B.P.H., Stanculescu, E., Basnett, J., Duck, R., Farrell, P., Liu, Y., Negovan, V., Nelson, B., Yang, H., & Zollneritsch, J. (2016). Cultural universality and specificity of student engagement in school: The results of an international study from 12 countries. British Journal of Educational Psychology, 86, 137-153. https://doi.org/10.1111/bjep.12079

LaNasa, S.M., Cabrera, A.F., & Trangsrud, H. (2009). The construct validity of student engagement: A confirmatory factor analysis approach. Research in Higher Education, 50, 315-332. https://doi.org/10.1007/s11162-009-9123-1

Lee, J., & Shute, V.J. (2010). Personal and Social-Contextual Factors in K–12 Academic Performance: An Integrative Perspective on Student Learning. Educational Psychologist, 45(3), 185-202. https://doi.org/10.1080/00461520.2010.493471

Macfarlane, B., & Tomlinson, M. (2017). Critiques of student engagement. Higher Education Policy, 30(1), 5-21. https://doi.org/10.1057/s41307-016-0027-3

Mandernach, B.J. (2015). Assessment of Student Engagement in Higher Education: A Synthesis of Literature and Assessment Tools. International Journal of Learning, Teaching and Educational Research, 12(2), 1-14.

Martin, A.J., Mansour, M., & Malmberg, L-E. (2020). What factors influence students’ real-time motivation and engagement? An experience sampling study of high school students using mobile technology. Educational Psychology, 40(9), 1113-1135. https://psycnet.apa.org/doi/10.1080/01443410.2018.1545997

McMahon, B., & Portelli, J. (2004). Engagement for what? Beyond popular discourses of student engagement. Leadership and Policy in Schools, 3(1), 59-76.

Montoya, O. (2007). Aplicación del análisis factorial a la investigación de mercados. Caso de estudio. Scientia et Technica, 1(35), 281-286. https://doi.org/10.22517/23447214.5443

Mu, L., & Cole, J. (2019). Behavior-based student typology: A view from student transition from high school to college. Research in Higher Education, 60(8), 1171-1194. https://doi.org/10.1007/S11162-019-09547-X

Nepal, R., & Rogerson, A. M. (2020). From theory to practice of promoting student engagement in business and law-related disciplines: The case of undergraduate economics education. Education Sciences, 10(8), 205. https://doi.org/10.3390/educsci10080205

Newmann, F., Wehlage, G. G., & Lamborn, S. D. (1992). The significance and sources of student engagement. In F. Newmann (Ed.), Student engagement and achievement in American secondary schools (pp. 11–39). Teachers College Press.

Ouimet, J. A., & Smallwood, R. A. (2005). Assessment Measures: CLASSE-- The Class-Level Survey of Student Engagement. Assessment Update, 17(6), 13-15.

Pérez-Gil, J.A., Chacón, S., & Moreno, R. (2000). Validez de constructo: el uso de análisis factorial exploratorio-confirmatorio para obtener evidencias de validez. Psicothema, 12(2), 442-446.

Pike, G. R., & Kuh, G. D. (2005). A typology of student engagement for American Colleges and Universities. Research in Higher Education, 46(2), 185-209. https://doi.org/10.1007/s11162-004-1599-0

Phelps, E. (2004). The human amygdala and awareness: Interactions between emotion and cognition. In M.S. Gazzaniga (Ed.), The cognitive neurosciences (pp. 1005-1015). MIT Press.

Phillips, D.C. (2009). A Quixotic Quest? Philosophical Issues in Assessing the Quality of Educational Research. In P. B. Walters, A. Lareau, & S. H. Ranis (Eds.), Education Research on Trial: Policy Reform and the Call for Scientific Rigor. Routledge.

Reschly, A. L., Huebner, E. S., Appleton, J. J., & Antaramian, S. (2008). Engagement as flourishing: The contribution of positive emotions and coping to adolescents’ engagement at school and with learning. Psychology in the Schools, 45(5), 427-445. https://doi.org/10.1002/pits.20306

Rodríguez-Fernández, A., Ramos-Díaz, E., Ros, I., & Zuazagoitia, A. (2018). Implicación escolar de estudiantes de secundaria: La influencia de la resiliencia, el autoconcepto y el apoyo social percibido. Educación XX1, 21(1), 87-108. https://doi.org/10.5944/educxx1.20177

Schaufeli, W.B., Martínez, I., Marqués-Pinto, A., Salanova, M., & Bakker, A. (2002). Burnout and engagement in university students: A cross-national study. Journal of Cross-Cultural Psychology, 33, 464-481. https://doi.org/10.1177%2F0022022102033005003

Schnitzler, K., Holzberger, D., & Seidel, T. (2020). Connecting Judgment Process and Accuracy of Student Teachers: Differences in Observation and Student Engagement Cues to Assess Student Characteristics. Frontiers in Education, 10, 1-28. https://doi.org/10.3389/feduc.2020.602470

Schlechty, P.C. (2002). Working on the Work: An Action Plan for Teachers, Principals, and Superintendents. Jossey-Bass.

Seppälä, P., Mauno, S., Feldt, T., Hakanen, J., Kinnunen, U., Tolvanen, A., & Schaufeli, W. (2008). The Construct validity of the Utrecht Work Engagement Scale: Multisample and Longitudinal Evidence. Journal of Happiness Studies, 10, 459-481. https://doi.org/10.1007/s10902-008-9100-y

Shernoff, D.J. (2013). Optimal learning environments to promote student engagement. Springer.

Shernoff, D.J., Kelly, S., Tonks, S.M., Anderson, B., Cavanagh, R., Sinha, S., & Abdi, B. (2016). Student engagement as a function of environmental complexity in high school classrooms. Learning and Instruction, 43, 42-60. https://doi.org/10.1016/j.learninstruc.2015.12.003

Skinner, E. A., & Pitzer, J. R. (2012). Developmental dynamics of student engagement, coping, and everyday resilience. In S. L. Christenson, A. L. Reschly, & C. Wylie (Eds.), Handbook of research on student engagement (pp. 21–44). Springer.

Storbeck, J., & Clore, G.L. (2007). On the interdependence of cognition and emotion. Cognition and Emotion, 21(6), 1212-1237. https://doi.org/10.1080/02699930701438020

Theiss-Morse, E., & Hibbing, J.R. (2005). Citizenship and civic engagement. Annual Review of Political Science, 8, 227-249. https://doi.org/10.1146/annurev.polisci.8.082103.104829

Tourangeau, R., & Smith, T.W. (1996). Asking sensitive questions. The impact of data collection mode, questions format and context. Public Opinion Quarterly, 60, 275-304. https://doi.org/10.1086/297751

Tourangeau, R., & Yan, T. (2007). Sensitive questions in surveys. Psychological Bulletin, 133(5), 859-883. https://doi.org/10.1037/0033-2909.133.5.859

Van Uden, J.M., Ritzen, H., & Pieters, J.M. (2014). Engaging students: The role of teacher beliefs and interpersonal teacher behaviour in fostering student engagement in vocational education. Teaching and Teacher Education, 37, 1765-1776. https://doi.org/10.1016/j.tate.2013.08.005

Veiga, F. H., Robu, V., Conboy, J., Ortiz, A., Carvalho, C., & Galvão, D. (2016). Students’ engagement in school and family variables: A literature review. Estudos de Psicologia, 33(2), 187-197.

Wang, J., Hefetz, A., & Liberman, G. (2017). Applying structural equation modelling in educational research. Culture and Education, 29(3), 563-618. https://doi.org/10.1080/11356405.2017.1367907

Wiggins, B.L., Eddy, S.L., Wener-Fligner, L., Freisem, K., Grunspan, D.Z., Theobald, E.J., Timbrook, J., & Crowe, A.J. (2017). ASPECT: A Survey to Assess Student Perspective of Engagement in an Active-Learning Classroom. Life Sciences Education, 16(2), 1-13. https://doi.org/10.1187/cbe.16-08-0244

Zyngier, D. (2007). (Re)conceiving student engagement: What the students say they want. Putting young people at the centre of the conversation. LEARNing Landscapes, 1(1), 93-116. https://doi.org/10.36510/learnland.v1i1.240

Zyngier, D. (2008). (Re)conceptualising student engagement: Doing education not doing time. Teaching and Teacher Education, 24(7), 1765-1776. https://doi.org/10.1016/j.tate.2007.09.004

Author notes

E-mail: helena.benito@udg.edu

E-mail: esther.llop@udg.edu

E-mail: marta.verdaguer@udg.edu

E-mail: joaquim.comas@udg.edu

E-mail: ariadna.lleonart@udg.edu

E-mail: orts@xtec.cat

E-mail: anna.amado@udg.edu

E-mail: carles.rostan@udg.edu