Studies

VALIDATION OF A TOOL FOR SELF-EVALUATING TEACHER DIGITAL COMPETENCE

Educación XX1, vol. 24, no. 1, pp. 353-373, 2021

Universidad Nacional de Educación a Distancia

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.

Received: 24 March 2020

Accepted: 27 May 2020

How to reference this article:

Usart Rodríguez, M.; Lázaro Cantabrana, J.L. & Gisbert Cervera, M. (2021). Validation of a tool for self-evaluating teacher digital competence. Educación XX1, 24(1), 353-373, http://doi.org/10.5944/educXX1.27080

Abstract: The impressive and rapid development of society is leading to technological innovations that require new technical and cognitive skills. In particular, Teacher Digital Competence (TDC) is becoming increasingly important as an initial step towards better use of Digital Technologies (DT) in the classroom. Nevertheless, to date, future teachers in training programs have had no tools to help them self-evaluate their own competence and get instant feedback on how their TDC is developing. This study subjects COMDID-A, a TDC self-evaluation tool for initial teacher training, to dimensional and external validation in relation to age, gender and access to university. The sample consisted of 144 students on an initial teacher training program at a Catalan university. The results show that the dimensional structure proposed by the theoretical model is highly reliable and has four dimensions: 1. Didactic, curricular and methodological aspects; 2. Planning, organization and management of digital technological resources and spaces; 3. Ethical, legal and security aspects; and 4. Personal and professional development. Furthermore, significant correlations emerge between age and self-evaluated TDC: in particular, older students rate themselves as less competent than younger students. No gender differences were observed. The outcomes of this study could provide future teachers and educational institutions with a valid and reliable tool to guide their perception and awareness of TDC development. This tool could be implemented in the formative assessment process of initial teacher training to raise students’ awareness of this competence and give them the means to make meaningful use of DT in the classroom.

Keywords: Technological literacy, teacher training, educational technology, student teacher evaluation, self evaluation (Individuals).

INTRODUCTION

Education is the driving force behind the development of all countries and, like society, it has been transformed by the digitization process. Digital Technologies (DT) have great potential for transforming training processes (Slavich & Zimbardo, 2012) because they provide learners with new strategies, spaces, models and opportunities to learn (Gisbert & Johnson, 2015). Citizens of the 21st century need to develop competences so that they can be socially included and evolve, both personally and professionally, in a digital society. The European Commission (2018b) compiled the list of key competences for learning, one of which is Digital Competence (DC). Alongside these key competences, mastery of DT is considered a fundamental ability, on the same level as language, reading, writing and mathematics, which should allow citizens to develop the basic skills they need to learn, work and live in society. In this context, the educational system must have teachers who are prepared to respond to these new demands and to train their students in these key skills.

The European Commission published DigCompEdu (Redecker & Punie, 2017), which specifies the DC that teachers must have in order to practice their profession effectively. In Spain, INTEF (2017) defines Teacher DC (TDC) as the set of competences that 21st century teachers must develop to improve the efficacy of their educational practice and for their own ongoing professional development. Furthermore, the Government of Catalonia (Generalitat de Catalunya, 2018) states that TDC is “the ability of teachers to apply and transfer all their knowledge, strategies, skills and attitudes about learning and knowledge technologies into real and concrete situations of their professional praxis”. Teachers should therefore have both instrumental and methodological DC, demonstrated in their daily practice. According to this review, teacher training cannot be reduced to the acquisition of technological skills; it should also focus on how these skills are applied in teaching. The objective of this study is to validate a tool that helps future teachers develop their TDC during formative assessment. For this purpose, TDC is regarded as a complex competence, made up of a set of abilities, capacities and attitudes that teachers must develop if they are to incorporate DT into their professional practice and development (Lázaro et al., 2019), as discussed further throughout this article.

THEORETICAL FOUNDATION

The importance of developing TDC has been widely discussed in the teacher education literature. Sanz-Ponce and colleagues (2015) state that teachers’ self-perception of TDC should be carefully studied, because teachers should be leading the enormous change that DT represents in the classroom. In particular, initial teacher training should provide future teachers with specific DT training, so that they can properly implement DT in their professional activity (Papanikolaou et al., 2017). Several international benchmarks present standardization proposals for organizing TDC into the knowledge and skills that teachers need (Generalitat de Catalunya, 2018; INTEF, 2017; Redecker & Punie, 2017; Unesco, 2019). Sang and colleagues (2010) highlight the need for TDC training from the very beginning of teacher training courses.

The need for training in TDC is associated with the need to evaluate it. Students must be aware of their own level in planning, teaching and evaluating training activities, developing and using teaching resources, and promoting high-quality, up-to-date teaching (Tejada & Ruiz, 2016). In this regard, including self-evaluation processes within the formative assessment of initial teacher training is key to helping future teachers become aware of their own level of development in this competence (Cosi et al., 2020). According to Lázaro and Gisbert (2015) and the Generalitat de Catalunya (2018), the first level of TDC development is the competence that novice teachers should have acquired by the end of their initial training. Self-evaluation should not only serve assessment purposes but also have educational value, allowing students to manage their own learning process through reflection and awareness (Fazey & Fazey, 2001; Tejada & Ruiz, 2016). We understand the evaluation process as learning-oriented assessment, in which the feedback provided plays a fundamental role (Cosi et al., 2020).

In addition to identifying the dimensions and indicators of TDC, we need to know the different levels of development of this competence and to have valid instruments that allow educational institutions to grade this development in terms of learning and to guide future teachers as they acquire their competencies through continuous improvement. Evaluating TDC in initial teacher training presents important challenges because of the inherent difficulty of evaluating competencies and establishing a framework for assessment. New tools are needed to help students reflect on situations and problems in line with the indicators to be evaluated. In recent years, various TDC evaluation tests have been developed on the basis of standards that make it possible to analyze the TDC level of teachers and future teachers:

- The Wayfind Teacher Assessment (Banister & Reinhart, 2012) measures the use that teachers make of technology. This self-assessment test for teachers is already in use.

- Selfie is a self-assessment tool based on self-perception; its version for education centers and organizations has already been implemented. It applies the European Commission’s DigCompEdu (Redecker & Punie, 2017) as a reference standard. The assessment rubric has 6 levels of development, ranging from newcomer to pioneer, which mirrors the model used for classifying linguistic competence. As the European Commission (Redecker & Punie, 2017) points out, it is a reference framework that must be contextualized.

- The TDC Portfolio (INTEF, 2017) is an assessment system produced by the Spanish government for teachers, based on the Common Framework standard of TDC, in which teachers provide information about the assessment indicators. On the basis of this proposal, Tourón and colleagues (2018) developed an online self-assessment questionnaire to determine the respondent’s self-perception.

These are the reference frameworks for the instrument proposed in this paper. COMDID-A (Lázaro & Gisbert, 2015) is a self-assessment tool aligned with the proposal made by the Catalan government (Generalitat de Catalunya, 2018) and with the Spanish and European contexts, as outlined by Lázaro and colleagues (2019).

TOOL DESIGN AND DEVELOPMENT

The design and development process of this instrument has undergone several phases, which are summarized below (Lázaro & Gisbert, 2015; Lázaro et al., 2018):

Phase 1. Review of the scientific literature on which the tool is based:

- TDC dimensions. Dimension 1: Didactic, curricular and methodological aspects. Dimension 2: Planning, organization and management of digital technological spaces and resources. Dimension 3: Relational aspects, ethics and security. Dimension 4: Personal and professional aspects.

- Development levels of the competence: basic, intermediate, expert and transformational.

- Evaluation indicators.

Phase 2. Design of the questionnaire items. Based on the indicators and development levels of the competence, the questions placed users in professional situations in which they had to self-assess their ability to act. The wording of each question had to be clearly and precisely linked to its indicator in order to make it easier to answer. We consider self-assessment to be part of the self-knowledge and self-reflection that future teachers need to manage their own learning process.

Phase 3. Validation by experts. A total of 10 professionals, including researchers and teachers with at least 5 years of experience in the field of TDC development, reviewed the content and construction of the tool and contributed to drafting the items until a final version of 22 items, distributed across 4 dimensions, was obtained.

The present study arises from the problem pointed out by Esteve (2015) that, despite the importance of TDC, students and future teachers do not always reach a sufficient level of DC during teacher training, before they enter the professional world. Furthermore, Cabero (2013) concluded that teachers base the self-perception of their capacity to use DTs in the classroom on their wider knowledge of DT applications in general. In addition, as already mentioned, no appropriate instruments are being used to measure and evaluate the acquisition of TDC during students’ formative assessment process. There is a need for a valid and reliable self-evaluation tool that not only builds on existing frameworks but also focuses on the particular dimensions and areas that will be constant features of teachers’ daily classroom life, where DTs must be used naturally.

In order to validate the instrument externally, the variables to be studied in relation to the level of TDC were chosen from the literature. First, some authors have related the age of students to their self-perception of DC (Fazey & Fazey, 2001; Kumar & Vigil, 2011). In particular, the younger future teachers are, the higher they self-evaluate their competencies (Esteve, 2015). Hosein et al. (2010) point out that, whilst there may be age-related differences in perceptions and experiences of technology-mediated learning, other demographic characteristics, such as gender (Roig et al., 2015), are also important. Furthermore, in their study of in-service teachers, Area and colleagues (2016) concluded that younger teachers with less professional experience make less use of DT in teaching. Although most education students in our study are women, it is important to analyze whether their self-perception of DC differs from that of men, as has been found in samples from other countries (Hargittai & Shafer, 2006). In particular, Björk and colleagues (2018) found that Maltese male teachers self-reported as more confident in the use of DTs than their female counterparts. In summary, to ensure that COMDID-A is consistent, we validated it internally and externally with a sample of students. This was the final step in the process, after the evaluation by experts (Lázaro & Gisbert, 2015).

METHODOLOGY

Objective and Research Questions

The objective of the study is to validate COMDID-A internally and externally for assessing the TDC of education students.

To achieve this goal, we propose the following research questions:

- What is the factor structure and the internal reliability of COMDID-A?

- What is the correlation between the students’ self-assessed TDC and the variables of gender and age?

Sample and data collection

The COMDID-A questionnaire was made available in an online beta version built with PHP and a MySQL database. It was distributed to students in three class groups during the second semester of the 2017-18 academic year, as part of the formative assessment process. The final sample comprised n = 144 students who completed all the fields of the questionnaire. They were all first-year initial teacher training students at the Faculty of Education Sciences of the Universitat Rovira i Virgili, enrolled in different education degrees: the Double degree in Infant Education and Primary Education with a specialty in English, the Bachelor’s degree in Infant Education, and the Bachelor’s degree in Primary Education. Students answered the questionnaire on their mobile devices in the classroom. Although not random, the sample is representative of first-year pre-service students in our context, because the gender, age and access distributions of the respondents matched the national distribution of first-year student teachers in Catalan education (Generalitat de Catalunya, 2018). This process was part of a training activity in which the students analyzed the results of the test to identify the factors of TDC and their own level of competence. The activity was compulsory: all students completed the questionnaire under the guidance of teachers and were given group feedback at the end of the test to help them interpret the results. The procedure complied with current data protection regulations. Students were shown a short text with the legal requirements and had to check a box to agree to participate. They also had to enter their name and ID number on the first page of the questionnaire. To maintain the anonymity of respondents and ensure data protection, these data were deleted from the database and replaced with “student n” identifiers.

The final sample consisted of 144 students (105 women and 39 men). Their ages ranged from 17 to 45 years (M = 20.12, SD = 4.26). Of the total sample, 46 students (31.9%) had entered university through vocational education, 91 (63.2%) through university entrance exams, 6 (4.2%) as mature students (over 25 years old), and only 1 (0.7%) through the entrance exam for people over 45 years old. Table 5 shows the mean and standard deviation of each TDC dimension.
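The access-route shares can be checked by dividing each count by the final sample size. The sketch below does this in plain Python; the dictionary labels are our own shorthand for the four access routes described above, not variable names from the study's dataset.

```python
# Counts of students by university access route, as reported for the final sample.
access_counts = {
    "vocational education": 46,
    "university entrance exams": 91,
    "mature students (over 25)": 6,
    "entrance exam for people over 45": 1,
}

n = sum(access_counts.values())  # should equal the final sample size of 144

# Percentage of the final sample for each route, rounded to one decimal place.
access_shares = {route: round(100 * count / n, 1) for route, count in access_counts.items()}

for route, share in access_shares.items():
    print(f"{route}: {access_counts[route]} ({share}%)")
```

Because the four counts sum to 144, the rounded shares add up to 100%.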

Instruments

COMDID-A measures students’ perceptions of their own TDC across four factors: 1. Personal and professional; 2. Planning, organization and management of DT spaces and resources; 3. Teaching curriculum and methodology; and 4. Relationships, ethics and security. The instrument consists of 22 items rated on a 10-point Likert-type scale (1 = Very low to 10 = Very high). Four levels of competence were defined:

- Level 1: The teacher uses digital technologies as facilitators and elements for improving the teaching process. Example: the novice teacher or initial teacher trainee who includes digital technologies in teaching processes.

- Level 2: The teacher uses digital technologies to improve the teaching process flexibly and in a way that is adapted to the educational context. Example: the teacher with two or more years of experience who uses and manages the technological resources and spaces of the classroom and the school and adapts them to possible needs.

- Level 3: The teacher uses digital technologies efficiently to improve students’ academic results, their educational action and the quality of the school. Example: a teacher who is a model or leader at the school in the use of digital technologies.

- Level 4: The teacher uses digital technologies, researches how to use them to improve teaching processes and draws conclusions to respond to the needs of the education system. Example: a committed teacher who constantly reflects on and systematically analyzes their practice to discover new uses of technology in education, and who shares the results of their research in professional networks with the intention of generating knowledge.

Demographic data such as age, gender, educational level, current course and university were also collected.

Data Analysis

In order to answer the first research question (factor structure and construct validity), a Principal Component Analysis (PCA) was performed on the set of 22 observed variables (items) to reduce the data and identify a group of 4 factors or dimensions. A Varimax rotation was applied following Hair and colleagues (2014), on the assumption that the TDC factors are orthogonal (see table 3). Varimax simplifies the columns of the factor matrix by maximizing the variance of the squared loadings within each factor, which yields a simpler factor structure that is easier to interpret than those produced by other rotation methods. The sample size was sufficient for the analysis: according to Hair and colleagues, there should be at least 5 observations per item, which means a minimum sample of 5 × 22 = 110 students for our instrument. The internal consistency of each factor was analyzed using Cronbach’s alpha, and the second research question (the relationships between the dimensions of COMDID-A and the variables age and gender) was addressed with Pearson’s correlation coefficient. Data analysis was conducted with the statistical software SPSS (V22.0).
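For readers who want to see what the Varimax step does, here is a minimal plain-Python sketch of Kaiser's classic pairwise Varimax algorithm with optional Kaiser row normalization. It is an illustration under stated assumptions, not SPSS's implementation: the function names, the convergence tolerance and the toy two-factor loading matrix are hypothetical, and SPSS may use different convergence settings.

```python
import math


def varimax(loadings, normalize=True, tol=1e-8, max_iter=100):
    """Rotate a p x m loading matrix (list of rows) with Kaiser's pairwise Varimax.

    Returns the rotated loadings and the number of sweeps performed.
    Assumes every row has a non-zero communality when normalize=True.
    """
    a = [list(row) for row in loadings]  # work on a copy
    p, m = len(a), len(a[0])
    # Kaiser normalization: scale each row to unit length before rotating.
    h = [math.sqrt(sum(x * x for x in row)) for row in a]
    if normalize:
        a = [[x / hi for x in row] for row, hi in zip(a, h)]
    sweep = 0
    for sweep in range(1, max_iter + 1):
        largest_angle = 0.0
        for j in range(m - 1):
            for k in range(j + 1, m):
                # Closed-form optimal planar rotation angle for factors j and k.
                u = [a[i][j] ** 2 - a[i][k] ** 2 for i in range(p)]
                v = [2 * a[i][j] * a[i][k] for i in range(p)]
                A, B = sum(u), sum(v)
                C = sum(ui * ui - vi * vi for ui, vi in zip(u, v))
                D = 2 * sum(ui * vi for ui, vi in zip(u, v))
                phi = 0.25 * math.atan2(D - 2 * A * B / p, C - (A * A - B * B) / p)
                largest_angle = max(largest_angle, abs(phi))
                c, s = math.cos(phi), math.sin(phi)
                for i in range(p):
                    x, y = a[i][j], a[i][k]
                    a[i][j] = x * c + y * s
                    a[i][k] = -x * s + y * c
        if largest_angle < tol:  # no pair needed a meaningful rotation
            break
    if normalize:
        a = [[x * hi for x in row] for row, hi in zip(a, h)]
    return a, sweep


def varimax_criterion(a):
    """Sum over factors of the variance of the squared loadings."""
    p = len(a)
    total = 0.0
    for j in range(len(a[0])):
        sq = [row[j] ** 2 for row in a]
        total += sum(x * x for x in sq) / p - (sum(sq) / p) ** 2
    return total


# Hypothetical simple-structure loadings, mixed by a 30-degree rotation.
true_loadings = [[0.8, 0.0], [0.7, 0.0], [0.9, 0.0], [0.0, 0.8], [0.0, 0.7], [0.0, 0.6]]
t = math.radians(30)
unrotated = [[x * math.cos(t) - y * math.sin(t), x * math.sin(t) + y * math.cos(t)]
             for x, y in true_loadings]
rotated, sweeps = varimax(unrotated)
```

In this toy case the rotation recovers the simple structure exactly, leaves each item's communality unchanged and raises the Varimax criterion, which is the property that makes rotated solutions easier to interpret.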

RESULTS

Psychometric properties and factorial structure of COMDID-A

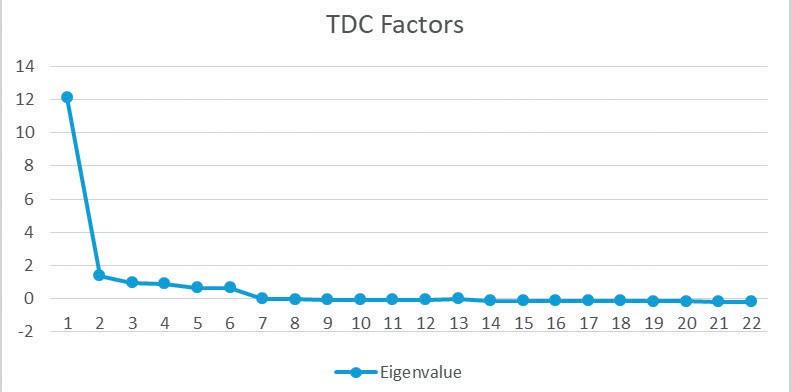

Following the construction and theoretical validation of the tool, we aimed to confirm its 4-dimension structure using PCA. The scree plot (Figure 1) indicates that four factors could be retained according to the scree test criterion (Cattell, 1966).

Figure 1

Scree plot

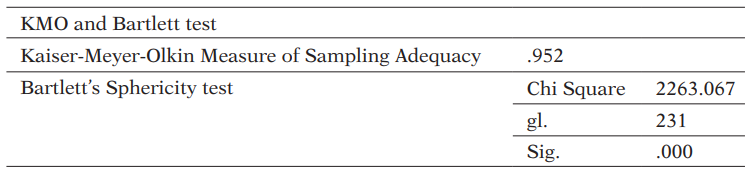

Sampling adequacy was very good: the Kaiser-Meyer-Olkin (KMO) index was .924 (see table 2). Four factors or dimensions were found in the student sample. Bartlett’s test of sphericity tests the null hypothesis that the correlation matrix is an identity matrix (that is, that the items are uncorrelated); only when the critical level is below .05 can this hypothesis be rejected and the variables be considered suitable for factor analysis.
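Bartlett's statistic itself is computed from the determinant of the item correlation matrix R as chi-square = -[(n - 1) - (2p + 5)/6] ln|R|, with p(p - 1)/2 degrees of freedom, where n is the number of respondents and p the number of items. The plain-Python sketch below follows that textbook formula; the 2 x 2 correlation matrix in the example is hypothetical, because the study's chi-square value is not reported in this section. For the 22 COMDID-A items the test would have 22 x 21 / 2 = 231 degrees of freedom.

```python
import math


def determinant(matrix):
    """Determinant of a square matrix via Gaussian elimination with partial pivoting."""
    a = [list(row) for row in matrix]
    n = len(a)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if a[pivot][col] == 0:
            return 0.0  # singular matrix
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def bartlett_sphericity(corr, n_obs):
    """Return Bartlett's chi-square statistic and its degrees of freedom.

    Assumes corr is a non-singular correlation matrix (log of 0 is undefined).
    """
    p = len(corr)
    chi2 = -((n_obs - 1) - (2 * p + 5) / 6) * math.log(determinant(corr))
    df = p * (p - 1) // 2
    return chi2, df


# Hypothetical example: two items correlating at .5, answered by 144 students.
chi2, df = bartlett_sphericity([[1.0, 0.5], [0.5, 1.0]], n_obs=144)
```

An identity matrix gives a statistic of zero, while correlated items push the determinant below 1 and the statistic above zero; the p-value then comes from the chi-square distribution with the returned degrees of freedom.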

Eigenvalues of the components extracted

The anti-image correlation matrix (which includes the sampling adequacy coefficient of each variable) shows low partial correlation coefficients, which again confirms that PCA is appropriate for the study variables. In addition, the 4 factors explain 69.54% of the variance, above the 60% proposed by Hair and colleagues (2014), with the first factor explaining 54% and each of the rest more than 4%. The eigenvalues of these 4 components are also all greater than .8. The number of factors was further checked against the percentage-of-variance criterion, under which extraction stops once the cumulative percentage of total variance explained is considered high enough (commonly about 80%, although the 60% threshold of Hair and colleagues (2014) cited above is the usual reference in the social sciences).
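Because the eigenvalues of a PCA on a correlation matrix sum to the number of items (here 22), the reported percentages can be converted back into eigenvalues. The short sketch below does this arithmetic; it is only a back-of-the-envelope check, since the published percentages are rounded and the individual eigenvalues of components 2 to 4 are not given in this text.

```python
N_ITEMS = 22  # COMDID-A items; eigenvalues of their correlation matrix sum to 22


def eigenvalue_from_percent(percent, n_items=N_ITEMS):
    """Convert a percentage of total variance into the equivalent eigenvalue."""
    return percent / 100 * n_items


first = eigenvalue_from_percent(54.0)   # first component
four = eigenvalue_from_percent(69.54)   # first four components together
rest = four - first                     # shared by components 2 to 4
floor = eigenvalue_from_percent(4.0)    # lower bound implied by "more than 4% each"

print(f"first: {first:.2f}, four: {four:.2f}, rest: {rest:.2f}, floor: {floor:.2f}")
```

Each of components 2 to 4 therefore has an eigenvalue above 0.88, which is consistent with all four eigenvalues exceeding .8, and together they account for about 3.42 eigenvalue units.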

Statistics for the study of the sample suitability of the model

Interpretation of the components

To make interpretation easier, and as mentioned above, we used Varimax rotation, which converged in 8 iterations; four components were extracted. As shown above, these four components explain more than 69% of the variance. For a better understanding, table 3 shows the rotated components already ordered by the factors (or dimensions) of our instrument. We suppressed all item loadings that were not greater than 0.45, again following the criterion given by Hair and colleagues (2014), which specifies that, for a sample of between 120 and 150 people, this is the minimum loading for an item to be included in a factor. To measure internal reliability, we used Cronbach’s alpha coefficient. Dimension 1 (6 items), Personal and professional, is highly reliable (α = .885). Dimension 2 (5 items), Planning, organization and management of DT spaces and resources, is also reliable (α = .889). Dimension 3 (5 items), Teaching curriculum and methodology, can be considered very good (α = .844), and Dimension 4 (6 items), Relationships, ethics and security, is the most reliable of all (α = .906). Removing any item from any of the dimensions lowers that dimension’s alpha, so we kept this composition for our instrument. The instrument has 22 items in total, and its overall Cronbach’s alpha is α = .896.
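Cronbach's alpha for a dimension with k items is alpha = k/(k - 1) * (1 - sum of item variances / variance of the total score). The plain-Python sketch below computes it together with the "alpha if item deleted" values used above to check that no item should be removed; SPSS reports these as Cronbach's Alpha and Cronbach's Alpha if Item Deleted. The function names and the small example scores are hypothetical.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha; items is a list of k lists, one score per respondent."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]  # total score per respondent
    sum_item_var = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - sum_item_var / variance(totals))


def alpha_if_item_deleted(items):
    """Alpha recomputed with each item left out in turn (needs at least 3 items)."""
    return [cronbach_alpha(items[:i] + items[i + 1:]) for i in range(len(items))]


# Hypothetical scores of three respondents on two items (1-10 scale).
two_items = [[1, 2, 3], [2, 3, 5]]
print(round(cronbach_alpha(two_items), 3))
```

If every alpha-if-deleted value for a dimension is lower than that dimension's full alpha, dropping an item would not improve reliability, which is the reasoning used above to keep all 22 items.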

Analysis of correlations: age and gender

The students in the sample scored highest on D3. Relational aspects, ethics and security, followed by D4. Personal and professional, and lowest on the two pedagogical dimensions: D2. Planning, and D1. Didactics, curriculum and methodology. There are, however, no significant differences between the scales, and they all correlate positively and significantly with each other (see table 4). To analyze the relationship between age and each of the four TDC dimensions, we calculated Pearson’s correlation coefficient. The results show that students’ age correlates significantly with D2. Planning, organization and management of digital technological resources and spaces, r(142) = -.29, p < .01; D4. Personal and professional, r(142) = -.33, p < .01; and D3. Relational aspects, ethics and security, r(142) = -.25, p < .01. With D1. Didactics, curriculum and methodology, however, the correlation is significant only at the p < .05 level, r(142) = -.21 (see table 4). All the correlations are negative; that is, the younger the students, the higher they rate their own TDC.
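The significance levels reported for r(142) can be reproduced from r and the sample size with the usual t-test for a correlation coefficient, t = r * sqrt(df / (1 - r^2)) with df = n - 2. The stdlib-only sketch below integrates Student's t density numerically (Simpson's rule) instead of calling a statistics library; the function names and the number of integration steps are our own choices, not anything taken from SPSS. With gender coded 0/1, the same pearson_r function gives the point-biserial correlation, which is what a Pearson correlation with gender amounts to.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, n):
    """t statistic and degrees of freedom for testing H0: rho = 0."""
    df = n - 2
    return r * math.sqrt(df / (1 - r * r)), df


def t_pdf(t, df):
    """Density of Student's t distribution, computed on the log scale."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c - (df + 1) / 2 * math.log1p(t * t / df))


def two_tailed_p(t, df, steps=2000):
    """Two-tailed p-value: 1 - 2 * integral of the density from 0 to |t| (Simpson's rule)."""
    b, h = abs(t), abs(t) / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 1 - 2 * total * h / 3


# Correlations between age and each dimension reported above (n = 144).
reported = {"D2": -0.29, "D4": -0.33, "D3": -0.25, "D1": -0.21}
p_values = {}
for dim, r in reported.items():
    t, df = t_statistic(r, 144)
    p_values[dim] = two_tailed_p(t, df)
    print(f"{dim}: r({df}) = {r:.2f}, t = {t:.2f}, p = {p_values[dim]:.4f}")
```

Running this puts D2, D3 and D4 below .01 and D1 between .01 and .05, matching the significance levels reported in the text.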

Rotated component matrix (Varimax). Extraction method: PCA. Rotation converged in 8 iterations

Finally, gender does not correlate significantly with any of the four dimensions, which means that there are no significant gender differences in self-assessed TDC in our sample.

Pearson correlations (*p < .05; **p < .01)

DISCUSSION

This article aimed to study COMDID-A as a measure of the level of TDC among initial teacher training students. The PCA and Cronbach’s alpha results are very good in terms of validity and reliability, and show that this tool can now be applied to other samples to continue the external validation process. They also enable us to decide whether to include the items that do not have enough weight in any of the four factors found. These factors are the same as those defined by theory (Lázaro & Gisbert, 2015; Lázaro et al., 2018).

We shall now discuss this part of the validation process dimension by dimension. For D1. Teaching curriculum and methodology, all the items fall within the theoretical dimension to which they were assigned during instrument creation and expert validation. However, item 1.5 (“When teaching, I include the guidelines of the educational institution for the integration of digital technologies in the classroom.”) also loads highly on D2. This suggests that student teachers believe that including these guidelines in their programming has to be planned. COMDID-A is currently being applied to several samples of in-service teachers in Catalonia to determine whether this item should be retained. In D2. Planning, organization and management, there are two items that were originally in D4. Item 4.5 (“I train myself by doing activities related to digital technologies.”) seems to be understood as an organizational task rather than as an aspect of training in itself. Indeed, the components of DT organization and management can be found as content in all teacher training activities. However, improving TDC through continuing teacher training has become one of the priorities for the professional development of practicing teachers (Redecker & Punie, 2017; INTEF, 2017). We therefore believe that placing the item in D4, which deals with personal and professional development, is justified. Item 4.3 (“I use digital technologies with students, and I am a reference for using digital technology”) seems to be understood by students as the organization and management of DT rather than as a personal issue. This item is related to the role of leader and model, which is fundamental in the teaching profession (Torrance & Forde, 2017) and closely connected to personal abilities such as communication, motivation, critical thinking, empathy and personal safety.
However, taking on the responsibility of being a reference or leader necessarily involves the ability to organize and manage digital technological resources, which implies being a source of inspiration for students (at the lowest level) and for colleagues (at a more advanced level), and is part of teachers’ personal and professional development (Redecker & Punie, 2017). Item 3.2 (“I promote the access and use of digital technologies by all students with the intention of compensating for inequalities”) loads on D2 as well as on D3, and the first part of this item carries more weight than the second. Guaranteeing all students access to technology implicitly involves digital inclusion and the compensatory function of education (Lázaro et al., 2015). Last but not least, item 2.4 (“I follow the guidelines that schools prepare for teachers on the use of digital technologies in teaching”) has low loadings on all dimensions. We believe that this item has to do with the relational side of the school, which is why its highest loading is on D3. The item deals with the guiding and regulatory function of the documents of educational institutions, which are part of their educational project and autonomy (Generalitat de Catalunya, 2018). The D3 scale (Relationships, ethics and security) has the lowest reliability, but according to Hair et al. (2014) it is still very good.

Access to technology must be guaranteed as part of the function of compensating for and regulating inequalities that all schools, and the education system in general, must perform (Lázaro et al., 2015). This is closely connected to D3. Finally, the D4 scale (Personal and professional) has the highest internal reliability. However, one item (3.5: “I access the contents distributed in different digital spaces of the educational center and comment on them [blogs, virtual environments, social networks, etc.]”) that should be in D3 in fact loads on D4 in the PCA. We believe that this is because the personal side of social networks carries more weight in this sentence. When the instrument was constructed, however, this item was placed in D3 because the digital spaces of the educational center and their contents must be part of an institutional strategy (frequency of publication, recipients, objectives, communication strategy linked to projects, etc.) (Fundació Jaume Bofill, 2016). Promoting the use of the center’s digital spaces should be part of an institutional communication and visibility strategy towards the outside (European Commission, 2018a). We argue that item 3.5 should remain in D3 because we agree with Spiteri and Rundgren (2017) that the responsible and ethical use of technology can be modeled, discussed and practiced by teachers, but that teachers need to be aware of DT and the new practices it entails. Therefore, this indicator should be in D3.

We now turn to the correlation of age, gender and university access with students’ TDC in order to answer our second research question. In our sample, age correlates significantly and negatively with D2, D4 and D3: the older the student, the lower the self-evaluation in three of the four dimensions. Average values are nevertheless high. This may be related to the over-confidence with which future teachers approach DTs in general (younger people use technology in a more natural way, which is one of the characteristics of present-day students; Kumar & Vigil, 2011) and the self-evaluation of their DT competence in particular (Esteve, 2015). However, as reported by Roig and colleagues (2015), there is another aspect of age that should be taken into account: the number of years of teaching also correlates with factors of DT use. This contrasts with Prensky’s (2001) postulates about digital natives, according to which young people tend to use DT more and better. In teaching practice, it seems clear that experience and age will determine the awareness and confidence with which teachers naturally appropriate and incorporate DTs into their daily activities (Area et al., 2016). In agreement with Vera et al. (2014), these results show that students have an acceptable level of basic TDC, but not of applying DT to teaching or of the digital strategies necessary for their own professional development. This also coincides with Cabero (2013), who concludes that teachers perceive themselves as more than able to use DT in classrooms because they know several Office applications for work in class. However, they have little command of specific digital tools, for example for designing online activities to complement or support teaching processes. Therefore, there is a need for specific training in these areas.

Gender does not correlate significantly with the self-perceived level of TDC. By contrast, according to Björk Gudmundsdottir and Hatlevik (2018), Maltese male teachers claimed to be more confident in their use of DT than female teachers. Furthermore, research has found that men tend to over-report their own DT skills (Hargittai & Shafer, 2006), which suggests that women judge themselves more strictly than men. In our case, women and men seem to assess themselves in a similar way, because in the teaching profession (the non-university compulsory education system) women have the same responsibility for their pupils as men do. In addition, the women evaluated had been trained in the use of DT, and the more experience women have in the use of DT, the more positive their attitudes and self-confidence are (Teo, 2008). Likewise, in their study on autonomy in learning, Fazey and Fazey (2001) found that gender was less relevant to the self-perception of competence of first-year university students.

Although we have reported one particular tool for the self-assessment of TDC, TDC is a complex process that can only be measured and understood by using a wide variety of tools. This study may help initial teacher training institutions by providing them with a valid and reliable assessment tool that complements the information from the curriculum and helps make proposals for reviewing and improving initial teacher training curricula. Furthermore, action can be taken to improve the training of future teachers on the basis of the specific data obtained for each dimension in the self-evaluation process. The results show that initial teacher training should include strategies on how to manage information from the Internet, how to search for and select information, and how to communicate it to others (Spiteri & Rundgren, 2017). To accept the new roles and ways of teaching, future teachers must reflect on and adopt the new models of teaching and learning.

CONCLUSIONS

In summary, COMDID-A is a valid and reliable instrument for evaluating TDC in our sample. It is also valid and reliable in terms of ethics and the replicability of the experience presented. In addition, implementing COMDID-A in initial teacher training studies can help students become more aware of their learning through formative assessment. The individual report that students receive immediately after completing the self-assessment questionnaire could be used by the teacher as a starting point for helping students recognize their individual shortcomings and for orienting learning towards overcoming the main weak points detected by the evaluation process.

To extend our study in the future, it would be interesting to use a larger sample: although the present sample was large enough to calculate the internal reliability and validity of the instrument, running a confirmatory factor analysis (CFA) requires a minimum of 500 responses. This would help us evaluate the most debated items, enable the instrument to be applied to curricula across Europe, and allow its external validity to be explored further. The instrument also needs to be studied in samples of in-service teachers and in relation to other demographic information. It may also be worth studying causal models that would allow us to take a step forward in terms of curricular design. As mentioned above, the process of evaluating and developing TDC is complex and, as well as using the self-evaluation tool reported, we shall also have to help and train students to use DT better and more accurately. This means that they have to be sufficiently digitally competent to know how and when to use digital resources in their teaching practice. The authors are already working in this direction by designing and validating COMDID-C, a tool that evaluates TDC, helps triangulate qualitative data from interviews, complements COMDID-A with various other techniques (Lázaro et al., 2019) and relates TDC to the real use of DT in the classroom.

We believe that the work presented in this article is a valid contribution to evaluating TDC in the context of initial teacher training. Starting from certain subjects on the undergraduate curriculum, training in TDC should include reflection on professional practice as a fundamental part of the process. Formative assessment plays a fundamental role in the learning process of future teachers. This process of reflecting on daily teaching experiences, framed as real or simulated problems involving the use of DT, will allow teachers to improve their TDC (Carrera et al., 2019; Pozos & Tejada, 2018).

Acknowledgments

This study was carried out as part of the ACEDIM project (Ref. 2017ARMIF00031).

REFERENCES

Area, M., Hernández, V., & Sosa, J.J. (2016). Modelos de integración didáctica de las TIC en el aula. Comunicar, 47, 79-87. https://doi.org/10.3916/C47-2016-08

Banister, S. & Reinhart, R. (2012). Assessing NETS-T performance in teacher candidates: Exploring the Wayfind teacher assessment. Journal of Digital Learning in Teacher Education, 29(2), 59-65. https://doi.org/10.1080/21532974.2012.10784705

Björk Gudmundsdottir, G. & Hatlevik, O.E. (2018). Newly qualified teachers’ professional digital competence: implications for teacher education. European Journal of Teacher Education, 41(2), 214-231. https://doi.org/10.1080/02619768.2017.1416085

Cabero, J. (2013). El aprendizaje autorregulado como marco teórico para la aplicación educativa de las comunidades virtuales y los entornos personales de aprendizaje. Education in the Knowledge Society, 14(2), 133-156. https://doi.org/10.14201/eks.10217

Carrera, F.X., Coiduras, J., Lázaro, J.L., & Pérez, F. (2019). La competencia digital docente: definición y formación del profesorado. In M. Gisbert, V. Esteve-González, & J.L. Lázaro-Cantabrana (Eds.), ¿Cómo abordar la educación del futuro? Conceptualización, desarrollo y evaluación desde la competencia digital docente (pp. 59-78). Octaedro.

Cattell, R.B. (1966). The scree test for the number of factors. Multivariate Behavioral Research, 1(2), 245-276. https://doi.org/10.1207/s15327906mbr0102_10

Cosi, A., Voltas, N., Lázaro-Cantabrana, J.L., Morales, P., Calvo, M., Molina, S., & Quiroga, M.A. (2020). Formative assessment at university through digital technology tools. Profesorado, revista de currículum y formación del profesorado, 24(1), 164-183. https://doi.org/10.30827/profesorado.v24i1.9314

Esteve, F. (2015). La competencia digital docente. [Doctoral dissertation], Universitat Rovira i Virgili. http://hdl.handle.net/10803/291441

European Commission. (2018a). DigCompOrg: Digitally Competent Educational Organisations. https://bit.ly/2AM8gQH

European Commission. (2018b). Proposal for a council recommendation on key competences for lifelong learning. https://bit.ly/3dnSnhA

Fazey, D., & Fazey, J. (2001). The Potential for Autonomy in Learning: Perceptions of competence, motivation and locus of control in first-year undergraduate students. Studies in Higher Education, 26(3), 345-361. https://doi.org/10.1080/03075070120076309

Fundació Jaume Bofill (2016). Aliances per a l’èxit educatiu: Orientacions per desenvolupar un projecte Magnet. DL: B 16977-2016. https://bit.ly/3hDCIwK

Generalitat de Catalunya (2018). Competència digital docent del professorat de Catalunya. Departament d’Ensenyament. https://bit.ly/37AJH5f

Gisbert, M., & Johnson, L. (2015). Educación y tecnología: nuevos escenarios de aprendizaje desde una visión transformadora. RUSC. Universities and Knowledge Society Journal, 12(2), 1-14. https://doi.org/10.7238/rusc.v12i2.2570

Hair, J.F., Black, W.C., Babin, B.J., & Anderson, R.E. (2014). Multivariate data analysis. Pearson Education.

Hargittai, E., & Shafer, S. (2006). Differences in Actual and Perceived Online Skills: The Role of Gender. Social Science Quarterly, 87(2), 432-448. https://doi.org/10.1111/j.1540-6237.2006.00389.x

Hosein, A., Ramanau, R., & Jones, C. (2010). Learning and Living Technologies: A Longitudinal Study of First Year Students’ Frequency and Competence in the Use of ICT. Learning, Media and Technology, 35(4), 403-418. https://doi.org/10.1080/17439884.2010.529913

INTEF (2017). Marco Común de Competencia Digital Docente. https://bit.ly/3hyB6Vb

Kumar, S., & Vigil, K. (2011). The net generation as preservice teachers: Transferring familiarity with new technologies to educational environments. Journal of Digital Learning in Teacher Education, 27(4), 144-153. https://doi.org/10.1080/21532974.2011.10784671

Lázaro, J.L., Estebanell, M., & Tedesco, J.C. (2015). Inclusion and Social Cohesion in a Digital Society. RUSC. Universities and Knowledge Society Journal, 12(2), 44-58. https://doi.org/10.7238/rusc.v12i2.2459

Lázaro, J.L., & Gisbert, M. (2015). Elaboración de una rúbrica para evaluar la competencia digital del docente. UTE. Revista de Ciències de l’Educació, 1, 30–47. https://doi.org/10.17345/ute.2015.1.648

Lázaro, J., Gisbert, M., & Silva, J. (2018). Una rúbrica para evaluar la competencia digital del profesor universitario en el contexto latinoamericano. Edutec. Revista Electrónica de Tecnología Educativa, 0(63), 1-14 (378). https://doi.org/10.21556/edutec.2018.63.1091

Lázaro, J.L., Usart, M., & Gisbert, M. (2019). Assessing Teacher Digital Competence: the Construction of an Instrument for Measuring the Knowledge of Pre Service Teachers. Journal of New Approaches in Educational Research, 8(1), 73-78. https://doi.org/10.7821/naer.2019.1.370

Marthese S. & Shu-Nu Chang. (2017). Maltese primary teachers’ digital competence: implications for continuing professional development. European Journal of Teacher Education, 40(4), 521-534. https://doi.org/10.1080/02619768.2017.1342242

Papanikolaou, K., Makri, K., & Roussos, P. (2017). Learning design as a vehicle for developing TPACK in blended teacher training on technology enhanced learning. International Journal of Educational Technology in Higher Education, 14(1), 34-41. https://doi.org/10.1186/s41239-017-0072-z

Pozos, K.V., & Tejada, J. (2018). Competencias digitales docentes en educación superior: niveles de dominio y necesidades formativas. Revista Digital de Investigación en Docencia Universitaria, 12(2), 59-87. https://doi.org/10.19083/ridu.2018.712

Prensky, M. (2001). Digital natives, digital immigrants, part 2: Do they really think differently? On the Horizon, 9(6), 1-6.

Redecker, C. & Punie, Y. (2017). European Framework for the Digital Competence of Educators: DigCompEdu. Publications Office of the European Union.

Roig, R., Mengual, S., & Quinto, P. (2015). Primary Teachers’ Technological, Pedagogical and Content Knowledge. Comunicar, 45, 151-159. https://doi.org/10.3916/C45-2015-16

Sang, G., Valcke, M., van Braak, J., & Tondeur, J. (2010). Student teachers’ thinking processes and ICT integration: Predictors of prospective teaching behaviors with educational technology. Computers & Education, 54(1), 103-112. https://doi.org/10.1016/j.compedu.2009.07.010

Sanz-Ponce, J.R., Hernando-Mora, I., & Mula-Benavent, J.M. (2015). La percepción del profesorado de Educación Secundaria en la Comunidad Valenciana acerca de sus conocimientos profesionales. Estudios sobre Educación, 29, 215-234. https://doi.org/10.15581/004.29.215-234

Slavich, G.M. & Zimbardo, P.G. (2012). Transformational teaching: theoretical underpinnings, basic principles, and core methods. Educational Psychology Review, 24(4), 569-608. https://doi.org/10.1007/s10648-012-9199-6

Spiteri, M. & Rundgren, S.N. (2017). Maltese primary teachers’ digital competence: implications for continuing professional development. European Journal of Teacher Education, 40(4), 521-534. https://doi.org/10.1080/02619768.2017.1342242

Teo, T. (2008). Pre-service teachers’ attitudes towards computer use: A Singapore survey. Australasian Journal of Educational Technology, 24(4), 413-424. https://doi.org/10.14742/ajet.1201

Torrance, D. & Forde, C. (2017). Redefining what it means to be a teacher through professional standards: implications for continuing teacher education. European Journal of Teacher Education, 40(1), 110-126. https://doi.org/10.1080/02619768.2016.1246527

Tourón, J., Martín, D., Navarro, E., Pradas, S., & Iñigo, V. (2018). Construct validation of a questionnaire to measure teachers’ digital competence (TDC). Revista Española de Pedagogía, 76(269), 25-54. https://doi.org/10.22550/REP76-1-2018-02

Unesco (2019). Marco de competencias de los docentes en materia de TIC. https://bit.ly/3bkUp0u

Vera, J.A., Torres, L.E., & Martínez, E.E. (2014). Evaluación de competencias básicas en TIC en docentes de educación superior en México. Píxel-Bit. Revista de Medios y Educación, 44, 143-155. https://doi.org/10.12795/pixelbit.2014.i44.10

Author notes

E-mail: mireia.usart@urv.cat

E-mail: joseluis.lazaro@urv.cat

E-mail: merce.gisbert@urv.cat